When it comes to infrastructure provisioning, including the AWS EKS Cluster, Terraform is often the first tool that comes to mind. Once you have learned it, describing infrastructure in Terraform is far less work than setting it up by hand every time. So, would you rather create an EKS Cluster using Terraform and have Terraform Kubernetes Deployment in place, or use the manual method and leave room for human error?

As you may already know, Terraform is an open-source Infrastructure as Code software platform that allows you to manage hundreds of cloud services using a uniform CLI approach and uses declarative configuration files to codify cloud APIs. In this article, we won’t go into all the details of Terraform. Instead, we will be focusing on Terraform Kubernetes Deployment.

In summary, we will be looking at the steps to provision EKS Cluster using Terraform. Also, we will go through how Terraform Kubernetes Deployment helps save time and reduce human errors which can occur when using a traditional or manual approach for application deployment.

Table of contents

- Prerequisites

- Architecture

- Highlights

- Why Provision and Deploy with Terraform?

- How to Create EKS Cluster Using Terraform?

- How to Deploy a Sample Nodejs Application on the EKS Cluster Using Terraform?

- Cleanup the Resources we Created

- Conclusion

- FAQs

Prerequisites for Terraform Kubernetes Deployment

Before we proceed and provision EKS Cluster using Terraform, there are a few commands or tools you need to have in mind and on hand. First off, you must have an AWS Account, and Terraform must be installed on your host machine, seeing as we are going to create EKS Cluster using Terraform CLI on the AWS cloud.

Now, let’s take a look at the prerequisites for this setup and help you install them.

1. AWS Account: If you don’t have an AWS account, you can register for a Free Tier Account and use it for test purposes. Click here to learn more about the Free Tier AWS Account or create one if you don’t already have one.

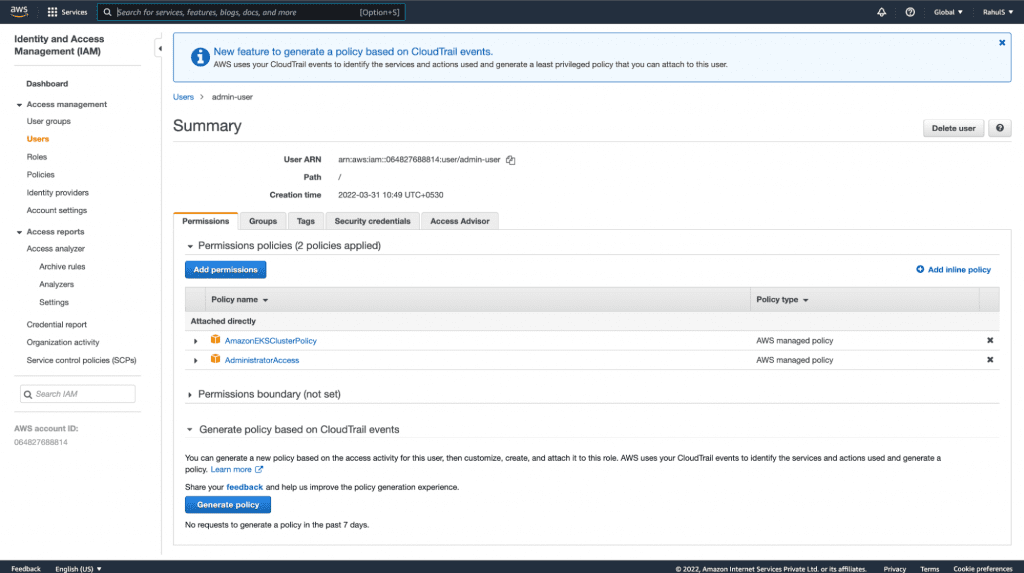

2. IAM Admin User: You must have an IAM user with AmazonEKSClusterPolicy and AdministratorAccess permissions as well as its secret and access keys. We will be using the IAM user credentials to provision EKS Cluster using Terraform. Click here to learn more about the AWS IAM Service. The keys that you create for this user will be used to connect to the AWS account from the CLI (Command Line Interface).

When working on production clusters, only provide the required access and avoid providing admin privileges.

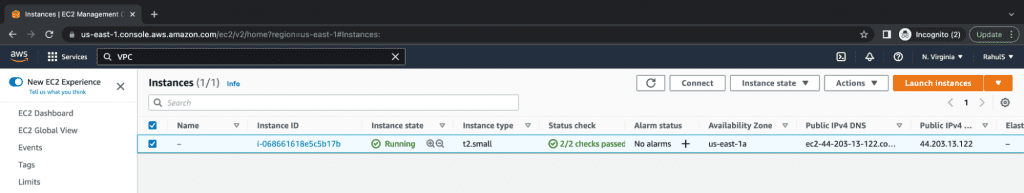

3. EC2 Instance: We will be using an Ubuntu 18.04 EC2 Instance as the host machine to execute our Terraform code. This step is optional: you can also use your personal computer or another machine, but you will then need to verify which commands are compatible with it in order to install the required packages. Click here to learn more about the AWS EC2 service.

4. Access to the Host Machine: Connect to the EC2 Instance and install the unzip package.

a. ssh -i "<key-name.pem>" ubuntu@<public-ip-of-the-ec2-instance>

If you are using your personal computer, you do not need to connect to an EC2 instance; however, the installation commands may differ.

b. sudo apt-get update -y

c. sudo apt-get install unzip -y

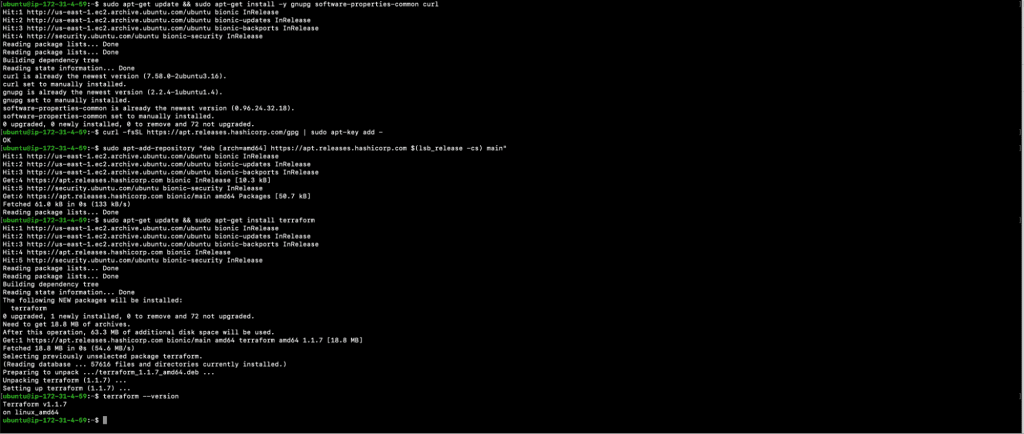

5. Terraform: To create EKS Cluster using Terraform, you need to have Terraform on your Host machine. Use the following commands to install Terraform on an Ubuntu 18.04 EC2 machine. Click here to view the installation instructions for other platforms.

a. sudo apt-get update && sudo apt-get install -y gnupg software-properties-common curl

b. curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -

c. sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

d. sudo apt-get update && sudo apt-get install terraform

e. terraform --version

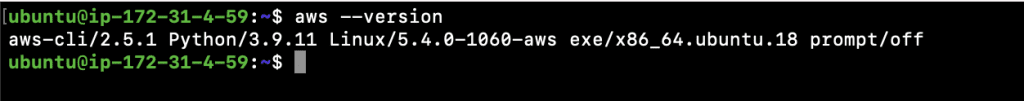

6. AWS CLI: We only need aws-cli to check the details of the IAM user whose credentials will be used from the terminal. To install it, use the commands below. Click here to view the installation instructions if you are using another platform.

a. curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

b. unzip awscliv2.zip

c. sudo ./aws/install

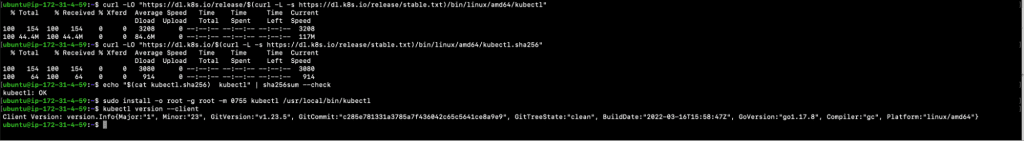

7. Kubectl: We will be using the kubectl command against the Kubernetes Cluster to view the resources in the EKS Cluster that we want to create. Install kubectl on Ubuntu 18.04 EC2 machine using the commands below. Click here to view different installation methods for installing kubectl on different platforms.

a. curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

b. curl -LO "https://dl.k8s.io/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"

c. echo "$(cat kubectl.sha256)  kubectl" | sha256sum --check

d. sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

e. kubectl version --client
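The checksum step (c) guards against a corrupted or tampered download before the binary is installed. As a rough illustration of that check, the sketch below wraps it in a small function. `verify_sha256` is a hypothetical helper name, not part of kubectl or coreutils, and the sketch assumes GNU `sha256sum` is available, as it is on Ubuntu.

```shell
# verify_sha256 FILE SUMFILE
# Hypothetical helper: succeeds only if FILE matches the hash stored in SUMFILE.
# SUMFILE is expected to hold just the hex digest, as kubectl.sha256 does.
verify_sha256() {
  file="$1"
  sumfile="$2"
  # sha256sum --check reads lines of the form "<hash>  <filename>"
  # (two spaces between the hash and the name). --status suppresses the
  # normal output so that only the exit code reports the result.
  printf '%s  %s\n' "$(cat "$sumfile")" "$file" | sha256sum --check --status
}
```

For example, `verify_sha256 kubectl kubectl.sha256 && echo "kubectl: OK"` would print the message only when the downloaded binary matches its published digest.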

8. DOT: This step is completely optional. The Terraform graph command generates a visual representation of a configuration or execution plan. Its output is in DOT format, which can easily be converted into an image using the dot command provided by Graphviz.

To install the DOT command, execute the command below.

a. sudo apt install graphviz

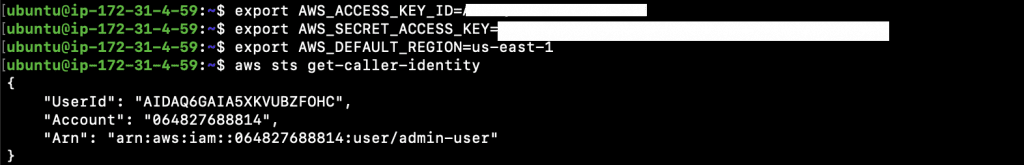

9. Export your AWS Access and Secret Keys for the current session. If the session expires, you will need to export the keys again on the terminal. There are other ways to use your keys that allow aws-cli to interact with AWS. Click here to learn more.

a. export AWS_ACCESS_KEY_ID=<YOUR_AWS_ACCESS_KEY_ID>

b. export AWS_SECRET_ACCESS_KEY=<YOUR_AWS_SECRET_ACCESS_KEY>

c. export AWS_DEFAULT_REGION=<YOUR_AWS_DEFAULT_REGION>

Here, replace <YOUR_AWS_ACCESS_KEY_ID> with your access key, <YOUR_AWS_SECRET_ACCESS_KEY> with your secret key and <YOUR_AWS_DEFAULT_REGION> with the default region for your aws-cli.
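Because the exported keys only live for the current session, it can help to confirm they are still set before running Terraform. The following is a minimal sketch of such a guard. `require_env` is a hypothetical helper name introduced here for illustration, and it deliberately prints only the variable names, never their values.

```shell
# require_env NAME...
# Hypothetical helper: returns non-zero and names each variable that is
# unset or empty. It never prints the variable values themselves.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call would be `require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION`, which fails if any of the three exports has been lost.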

10. Check the details of the IAM user whose credentials are being used. Basically, this will display the details of the user whose keys you used to configure the CLI in the above step.

a. aws sts get-caller-identity

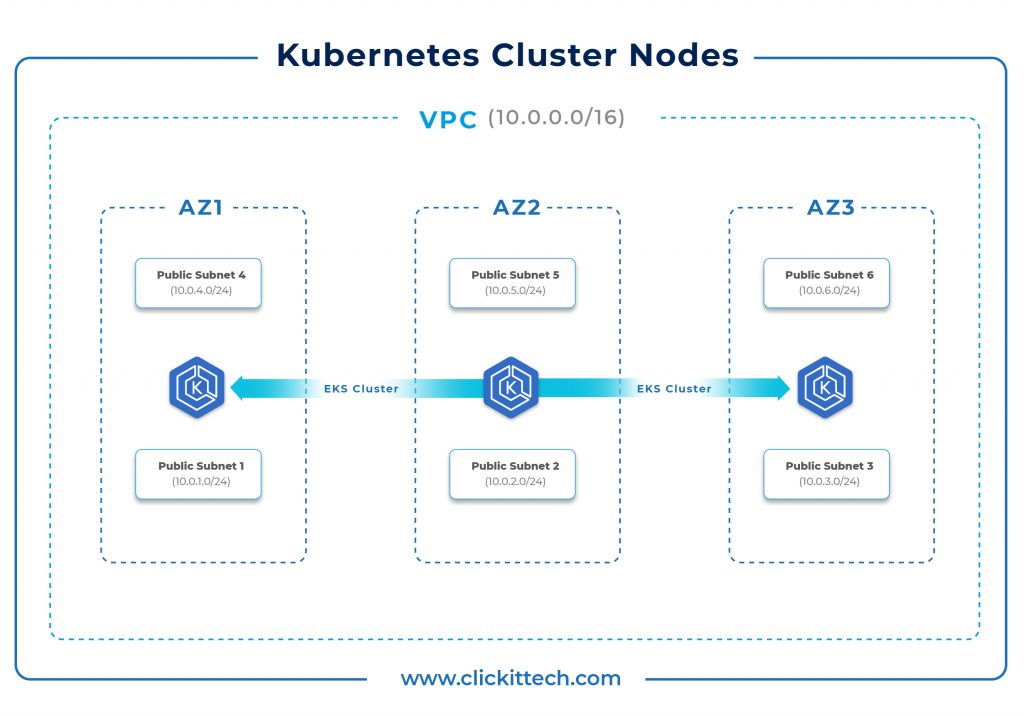
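With all the prerequisites installed, a quick check that every tool is actually on the PATH can save a failed run later. The sketch below is one possible way to do this. `check_tools` is a hypothetical helper name, and the list of tools passed to it is up to you.

```shell
# check_tools NAME...
# Hypothetical helper: prints each command that is not on PATH and
# returns non-zero if any of them is missing.
check_tools() {
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "not found: $tool"
      status=1
    fi
  done
  return "$status"
}
```

For this setup, the call would be `check_tools terraform aws kubectl unzip dot`, where `dot` can be left out if you skipped the optional Graphviz step.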

Architecture

The architecture should appear as follows.

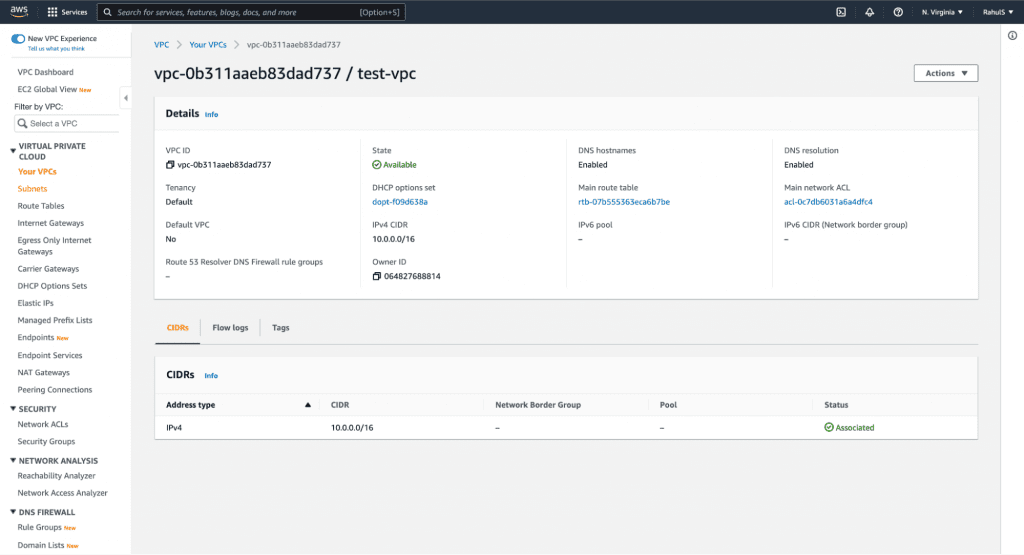

A VPC will be created with three Public Subnets and three Private Subnets. Traffic from Private Subnets will route through the NAT Gateway and traffic from Public Subnets will route through the Internet Gateway.

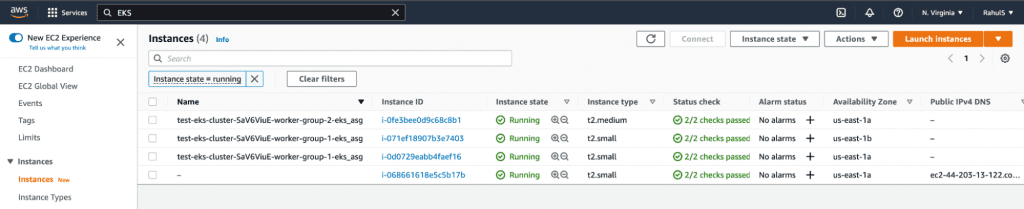

Kubernetes Cluster Nodes will be created as part of Auto-Scaling groups and will reside in Private Subnets. Public Subnets can be used to create Bastion Servers that can be used to connect to Private Nodes.

Three Public Subnets and three Private Subnets will be created in three different Availability Zones.

You can change the VPC CIDR in the Terraform configuration files if you wish. If you are just getting started, we recommend following the blog without making any unfamiliar changes to the configuration in order to avoid human errors.

Highlights

This blog will help you provision EKS Cluster using Terraform and deploy a sample NodeJs application. When creating an EKS Cluster, other AWS resources such as VPC, Subnets, NAT Gateway, Internet Gateway, and Security Groups will also be created on your AWS account. This blog is divided into two parts:

- Creation of an EKS Cluster using Terraform; and

- Deployment of a sample Nodejs application in the EKS Cluster using Terraform.


First off, we will create an EKS Cluster, after which we will deploy a sample Nodejs application on it using Terraform. In this blog, we refer to Terraform Modules for creating VPC, and its components, along with the EKS Cluster.

Here is a list of some of the Terraform elements we’ll use.

- Module:

A module is made up of a group of .tf and/or .tf.json files contained in a directory. Modules are containers for a variety of resources that are used in conjunction. Terraform’s main method of packaging and reusing resource configurations is through modules.

1.1. EKS:

We will be using a “terraform-aws-modules/eks/aws” module to create our EKS Cluster and its components.

1.2. VPC:

We will be using a “terraform-aws-modules/vpc/aws” module to create our VPC and its components.

- Data:

The Data Source is accessed by a data resource, which is specified using a data block and allows Terraform to use the information defined outside of Terraform, defined within another Terraform configuration, or changed by functions.

- Provider:

Provider is a Terraform plugin that allows users to communicate with cloud providers, SaaS providers, and other APIs. Terraform configurations must specify the required providers in order for Terraform to install and use them.

3.1. Kubernetes:

The Kubernetes provider is used to communicate with Kubernetes’ resources. Before the provider can be utilized, it must be configured with appropriate credentials. For this example, we will use host, token, and cluster_ca_certificate in the provider block.

3.2. AWS:

The AWS provider is used to communicate with AWS’ resources. Before the provider can be utilized, it must be configured with appropriate credentials. In this case, we will export AWS access and secret keys to the terminal.

- Resource:

The most crucial element of the Terraform language is resources. Each resource block describes one or more infrastructure items. A resource block specifies a particular kind of resource with a specific local name. The resource type and name serve to identify a resource and therefore must be distinct inside a module.

4.1. kubernetes_namespace:

We will be using kubernetes_namespace to create namespaces in our EKS Cluster.

4.2. kubernetes_deployment:

We will be using kubernetes_deployment to create deployments in our EKS Cluster.

4.3. kubernetes_service:

We will be using kubernetes_service to create services in our EKS Cluster.

4.4. aws_security_group:

We will be using aws_security_group to create multiple security groups for instances that will be created as part of our EKS Cluster.

4.5. random_string:

Random string is a resource that generates a random permutation of alphanumeric and special characters. We will be creating a random string that will be used as a suffix in our EKS Cluster name.

- Output:

Output values provide information about your infrastructure to other Terraform configurations and make that information available on the command line. Output values are similar to return values in programming languages. An output block must be used to declare each output value that a module exports.

- Required_version:

The required_version parameter accepts a version constraint string that determines which Terraform versions are compatible with your setup. The required_version parameter applies only to the version of the Terraform CLI.

- Variable:

Terraform variables, like variables in any other programming language, allow you to tweak the characteristics of Terraform modules without modifying the module’s source code. This makes your modules composable and reusable by allowing you to share modules across multiple Terraform configurations. Variables can include default values, which are used unless they are overridden when calling the module or running Terraform.

- Locals:

A local value gives an expression a name, allowing you to use it numerous times within a module without having to repeat it.

Before we go ahead and create EKS Cluster using Terraform, let’s take a look at why Terraform is a good choice.

Why Provision and Deploy with Terraform?

It’s normal to wonder “why provision EKS Cluster using Terraform” or “why create EKS Cluster using Terraform,” when we can simply achieve the same with the AWS Console or AWS CLI or other tools. Here are a few of the reasons why:

- Unified Workflow: If you’re already using Terraform to deploy infrastructure to AWS, your EKS Cluster can be integrated into that process. Also, Terraform can be used to deploy applications into your EKS Cluster.

- Full Lifecycle Management: Terraform not only creates resources, but it also updates and deletes tracked resources without you having to inspect the API to find those resources.

- Graph of Relationships: Terraform recognizes dependencies between resources via a relationship graph. If, for example, an AWS Kubernetes Cluster requires specific VPC and subnet configurations, Terraform will not attempt to create the cluster if the VPC and subnets fail to be created with the required configuration.

How to Create EKS Cluster Using Terraform?

In this part of the blog, we shall provision EKS Cluster using Terraform. While doing this, other dependent resources like VPC, Subnets, NAT Gateway, Internet Gateway, and Security Groups will also be created, and we will also deploy an Nginx application with Terraform.

Note: You can find all of the relevant code on my Github Repository. Before you create EKS Cluster with Terraform using the following steps, you need to set up and make note of a few things.

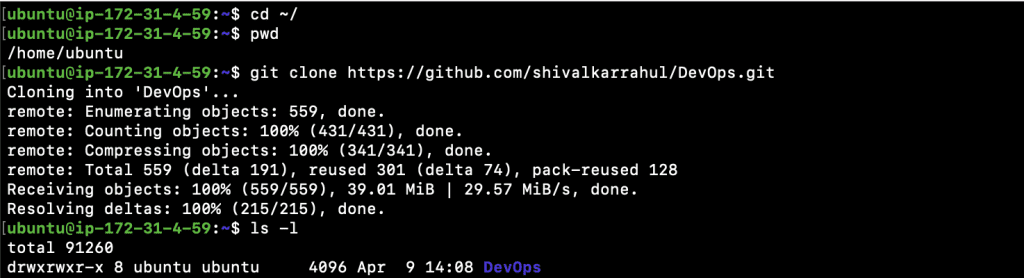

- Clone the Github Repository in your home directory.

1.1. cd ~/

1.2. pwd

1.3. git clone https://github.com/shivalkarrahul/DevOps.git

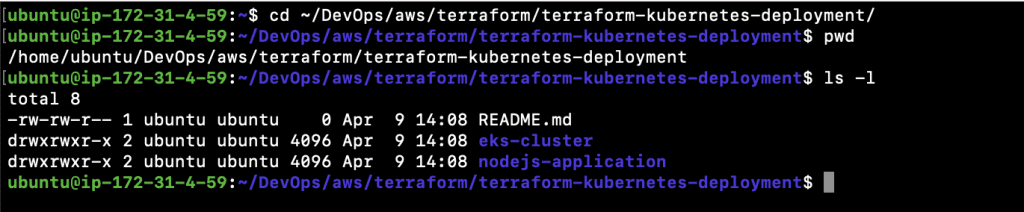

- After you clone the repo, change your “Present Working Directory” to ~/DevOps/aws/terraform/terraform-kubernetes-deployment/

2.1. cd ~/DevOps/aws/terraform/terraform-kubernetes-deployment/

- The Terraform files for creating an EKS Cluster must be in one folder and the Terraform files for deploying a sample NodeJs application must be in another.

3.1. eks-cluster

This folder contains all of the .tf files required for creating the EKS Cluster.

3.2. nodejs-application

This folder contains a .tf file required for deploying the sample NodeJs application.

Now, let’s proceed with the creation of an EKS Cluster using Terraform.

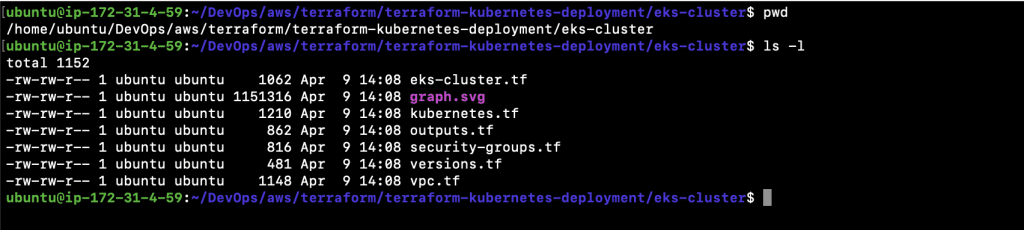

- Verify your “Present Working Directory”. It should be ~/DevOps/aws/terraform/terraform-kubernetes-deployment/, as you have already cloned my Github Repository in the previous step.

1.1. cd ~/DevOps/aws/terraform/terraform-kubernetes-deployment/

- Next, change your “Present Working Directory” to ~/DevOps/aws/terraform/terraform-kubernetes-deployment/eks-cluster

2.1. cd ~/DevOps/aws/terraform/terraform-kubernetes-deployment/eks-cluster

- You should now have all the required files.

- If you haven’t cloned the repo then you are free to create the required .tf files in a new folder.

- Create your eks-cluster.tf file with the content below. In this case, we are using the module from terraform-aws-modules/eks/aws to create our EKS Cluster. Let’s take a look at the most important inputs that you may want to consider changing depending on your requirements.

5.1. source : This informs Terraform of the location of the source code for the module.

5.2. version: It is recommended to explicitly specify the acceptable version number to avoid unexpected or unwanted changes.

5.3. cluster_name : Cluster Name is passed from the local variable.

5.4. cluster_version : This defines the EKS Cluster version.

5.5. subnets : This specifies the list of Subnets in which nodes will be created. For this example, we will be creating nodes in the Private Subnets. Subnet IDs are passed here from the module in which Subnets are created.

5.6. vpc_id : This specifies the VPC in which the EKS Cluster will be created. The value is passed from the module in which VPC is created.

5.7. instance_type : You can change this value if you want to create worker nodes with any other instance type.

5.8. asg_desired_capacity: Here, you can specify the desired number of nodes that you want in your auto-scaling worker node groups.

    module "eks" {
      source          = "terraform-aws-modules/eks/aws"
      version         = "17.24.0"
      cluster_name    = local.cluster_name
      cluster_version = "1.20"
      subnets         = module.vpc.private_subnets

      vpc_id = module.vpc.vpc_id

      workers_group_defaults = {
        root_volume_type = "gp2"
      }

      worker_groups = [
        {
          name                          = "worker-group-1"
          instance_type                 = "t2.small"
          additional_userdata           = "echo nothing"
          additional_security_group_ids = [aws_security_group.worker_group_mgmt_one.id]
          asg_desired_capacity          = 2
        },
        {
          name                          = "worker-group-2"
          instance_type                 = "t2.medium"
          additional_userdata           = "echo nothing"
          additional_security_group_ids = [aws_security_group.worker_group_mgmt_two.id]
          asg_desired_capacity          = 1
        },
      ]
    }

    data "aws_eks_cluster" "cluster" {
      name = module.eks.cluster_id
    }

    data "aws_eks_cluster_auth" "cluster" {
      name = module.eks.cluster_id
    }

- Create a kubernetes.tf file with the following content. Here, we are using Terraform Kubernetes Provider in order to create Kubernetes objects such as a Namespace, Deployment, and Service using Terraform. We are creating these resources for testing purposes only. In the following steps, we will also be deploying a sample application using Terraform. Here are all the resources we will be creating in the EKS Cluster.

6.1. kubernetes_namespace : We will be creating a namespace named “nginx” (the Terraform resource itself is labeled “test”) and using this namespace to create other Kubernetes objects in the EKS Cluster.

6.2. kubernetes_deployment : We will be creating a deployment with two replicas of Nginx Pod.

6.3. kubernetes_service : A service of type LoadBalancer will be created and used to access our Nginx application.

    provider "kubernetes" {
      host                   = data.aws_eks_cluster.cluster.endpoint
      token                  = data.aws_eks_cluster_auth.cluster.token
      cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)
    }

    resource "kubernetes_namespace" "test" {
      metadata {
        name = "nginx"
      }
    }

    resource "kubernetes_deployment" "test" {
      metadata {
        name      = "nginx"
        namespace = kubernetes_namespace.test.metadata.0.name
      }
      spec {
        replicas = 2
        selector {
          match_labels = {
            app = "MyTestApp"
          }
        }
        template {
          metadata {
            labels = {
              app = "MyTestApp"
            }
          }
          spec {
            container {
              image = "nginx"
              name  = "nginx-container"
              port {
                container_port = 80
              }
            }
          }
        }
      }
    }

    resource "kubernetes_service" "test" {
      metadata {
        name      = "nginx"
        namespace = kubernetes_namespace.test.metadata.0.name
      }
      spec {
        selector = {
          app = kubernetes_deployment.test.spec.0.template.0.metadata.0.labels.app
        }
        type = "LoadBalancer"
        port {
          port        = 80
          target_port = 80
        }
      }
    }

- Create an outputs.tf file with the following content. This is done to export the structured data related to our resources. It will provide information about our EKS infrastructure to other Terraform setups. Output values are equivalent to return values in other programming languages.

    output "cluster_id" {
      description = "EKS cluster ID."
      value       = module.eks.cluster_id
    }

    output "cluster_endpoint" {
      description = "Endpoint for EKS control plane."
      value       = module.eks.cluster_endpoint
    }

    output "cluster_security_group_id" {
      description = "Security group ids attached to the cluster control plane."
      value       = module.eks.cluster_security_group_id
    }

    output "kubectl_config" {
      description = "kubectl config as generated by the module."
      value       = module.eks.kubeconfig
    }

    output "config_map_aws_auth" {
      description = "A kubernetes configuration to authenticate to this EKS cluster."
      value       = module.eks.config_map_aws_auth
    }

    output "region" {
      description = "AWS region"
      value       = var.region
    }

    output "cluster_name" {
      description = "Kubernetes Cluster Name"
      value       = local.cluster_name
    }

- Create a security-groups.tf file with the following content. In this case, we are defining the security groups which will be used by our worker nodes.

    resource "aws_security_group" "worker_group_mgmt_one" {
      name_prefix = "worker_group_mgmt_one"
      vpc_id      = module.vpc.vpc_id

      ingress {
        from_port = 22
        to_port   = 22
        protocol  = "tcp"

        cidr_blocks = [
          "10.0.0.0/8",
        ]
      }
    }

    resource "aws_security_group" "worker_group_mgmt_two" {
      name_prefix = "worker_group_mgmt_two"
      vpc_id      = module.vpc.vpc_id

      ingress {
        from_port = 22
        to_port   = 22
        protocol  = "tcp"

        cidr_blocks = [
          "192.168.0.0/16",
        ]
      }
    }

    resource "aws_security_group" "all_worker_mgmt" {
      name_prefix = "all_worker_management"
      vpc_id      = module.vpc.vpc_id

      ingress {
        from_port = 22
        to_port   = 22
        protocol  = "tcp"

        cidr_blocks = [
          "10.0.0.0/8",
          "172.16.0.0/12",
          "192.168.0.0/16",
        ]
      }
    }

- Create a versions.tf file with the following content. Here, we are defining the required providers, including AWS, along with their version constraints and the required Terraform version.

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = ">= 3.20.0"
        }

        random = {
          source  = "hashicorp/random"
          version = "3.1.0"
        }

        local = {
          source  = "hashicorp/local"
          version = "2.1.0"
        }

        null = {
          source  = "hashicorp/null"
          version = "3.1.0"
        }

        kubernetes = {
          source  = "hashicorp/kubernetes"
          version = ">= 2.0.1"
        }
      }

      required_version = ">= 0.14"
    }

- Create a vpc.tf file with the following content. For the purposes of our example, we have defined a 10.0.0.0/16 CIDR for a new VPC that will be created and used by the EKS Cluster. We will also be creating Public and Private Subnets. To create a VPC, we will be using the terraform-aws-modules/vpc/aws module. All of our resources will be created in the us-east-1 region. If you want to use any other region for creating the EKS Cluster, all you need to do is change the value assigned to the “region” variable.

    variable "region" {
      default     = "us-east-1"
      description = "AWS region"
    }

    provider "aws" {
      region = var.region
    }

    data "aws_availability_zones" "available" {}

    locals {
      cluster_name = "test-eks-cluster-${random_string.suffix.result}"
    }

    resource "random_string" "suffix" {
      length  = 8
      special = false
    }

    module "vpc" {
      source  = "terraform-aws-modules/vpc/aws"
      version = "3.2.0"

      name                 = "test-vpc"
      cidr                 = "10.0.0.0/16"
      azs                  = data.aws_availability_zones.available.names
      private_subnets      = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
      public_subnets       = ["10.0.4.0/24", "10.0.5.0/24", "10.0.6.0/24"]
      enable_nat_gateway   = true
      single_nat_gateway   = true
      enable_dns_hostnames = true

      tags = {
        "kubernetes.io/cluster/${local.cluster_name}" = "shared"
      }

      public_subnet_tags = {
        "kubernetes.io/cluster/${local.cluster_name}" = "shared"
        "kubernetes.io/role/elb"                      = "1"
      }

      private_subnet_tags = {
        "kubernetes.io/cluster/${local.cluster_name}" = "shared"
        "kubernetes.io/role/internal-elb"             = "1"
      }
    }

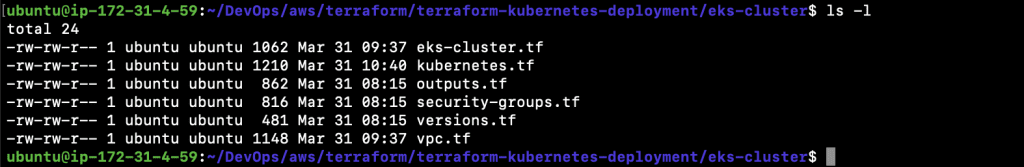

- At this point, you should have six files in your current directory (as can be seen below). All of these files should be in one directory. In the next step, you will learn to deploy a sample NodeJs application and to do so, you will need to create a new folder. That said, if you have cloned the repo herein, you won’t need to worry.

11.1. eks-cluster.tf

11.2. kubernetes.tf

11.3. outputs.tf

11.4. security-groups.tf

11.5. versions.tf

11.6. vpc.tf

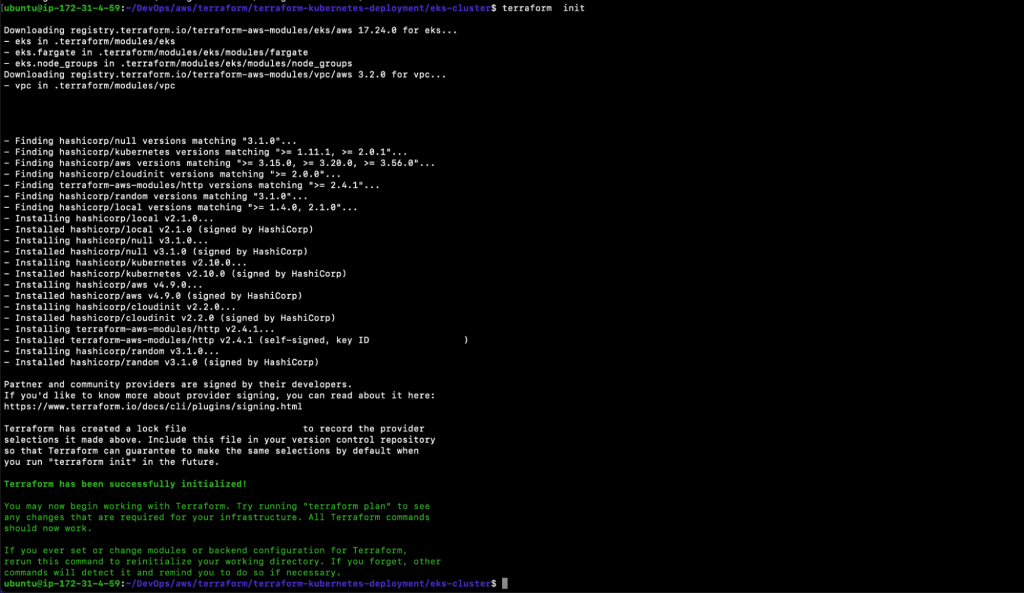

- To create an EKS Cluster, execute the following command to initialize the current working directory containing the Terraform configuration files (.tf files).

12.1. terraform init

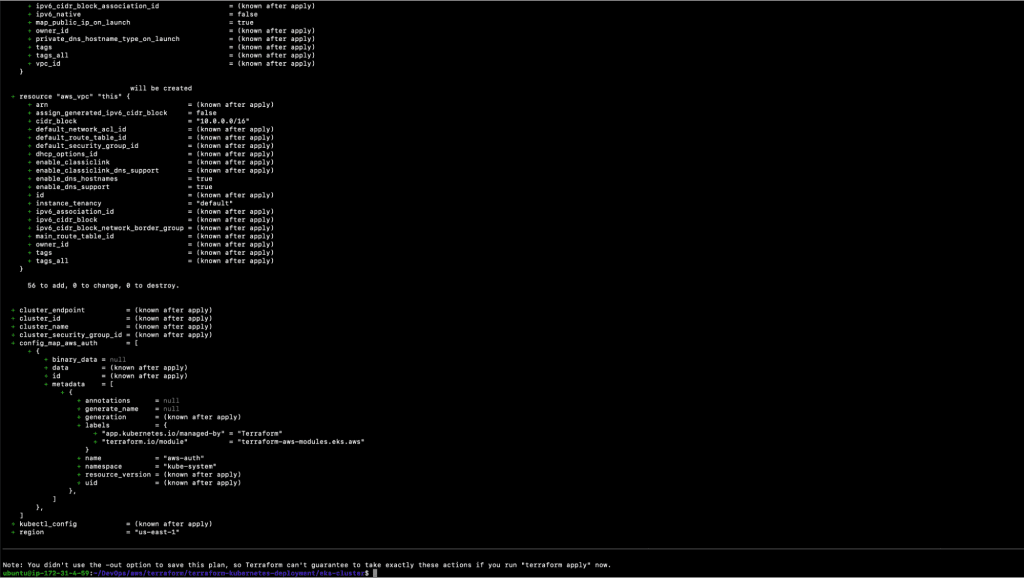

- Execute the following command to preview the execution plan, i.e. the changes Terraform will make to reach the desired state of all the resources defined in the above .tf files.

13.1. terraform plan

- Before we go ahead and create our EKS Cluster, let’s take a look at the “terraform graph” command. This is an optional step that you can skip if you wish. Basically, we are simply generating a visual representation of our execution plan. Once you execute the following command, you will get the output in a graph.svg file. You can try to open the file on your personal computer or online with SVG Viewer.

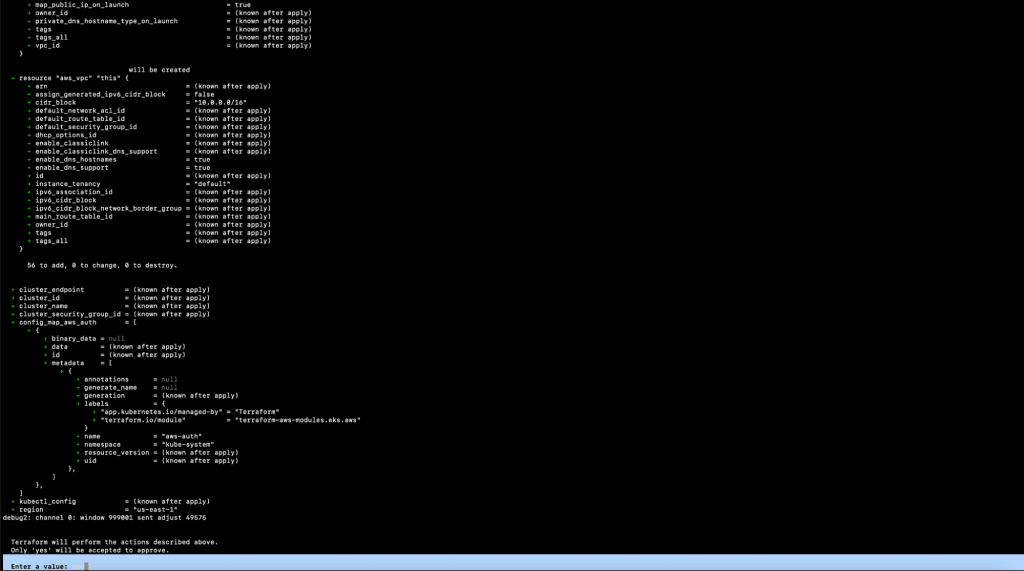

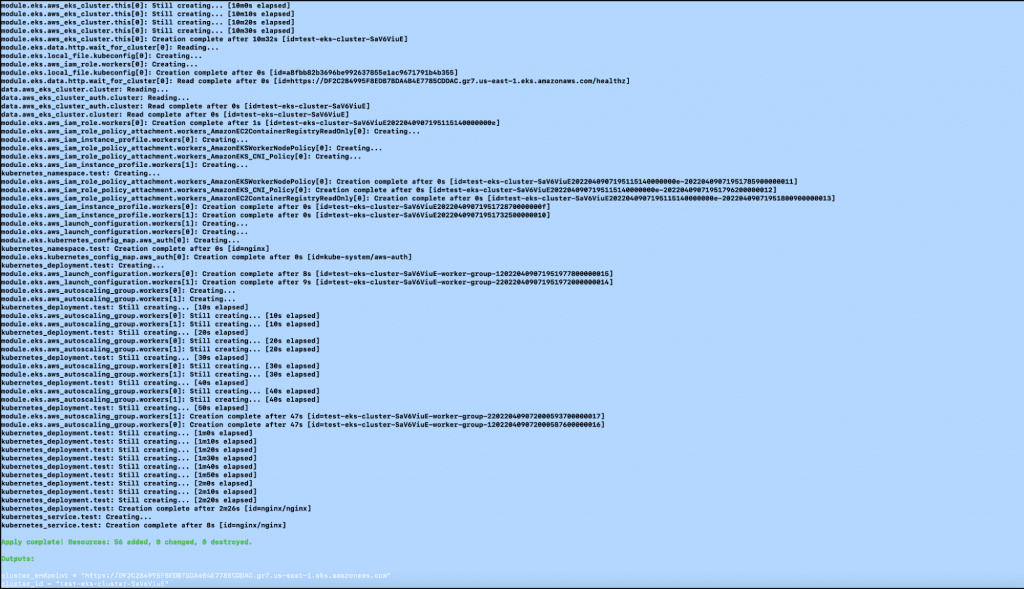

14.1. terraform graph -type plan | dot -Tsvg > graph.svg

- We must now create the resources using the .tf files in our current working directory. Execute the following command. This will take around 10 minutes to complete, so feel free to go have a cup of coffee. Once the command has completed successfully, the EKS Cluster will be ready.

15.1. terraform apply

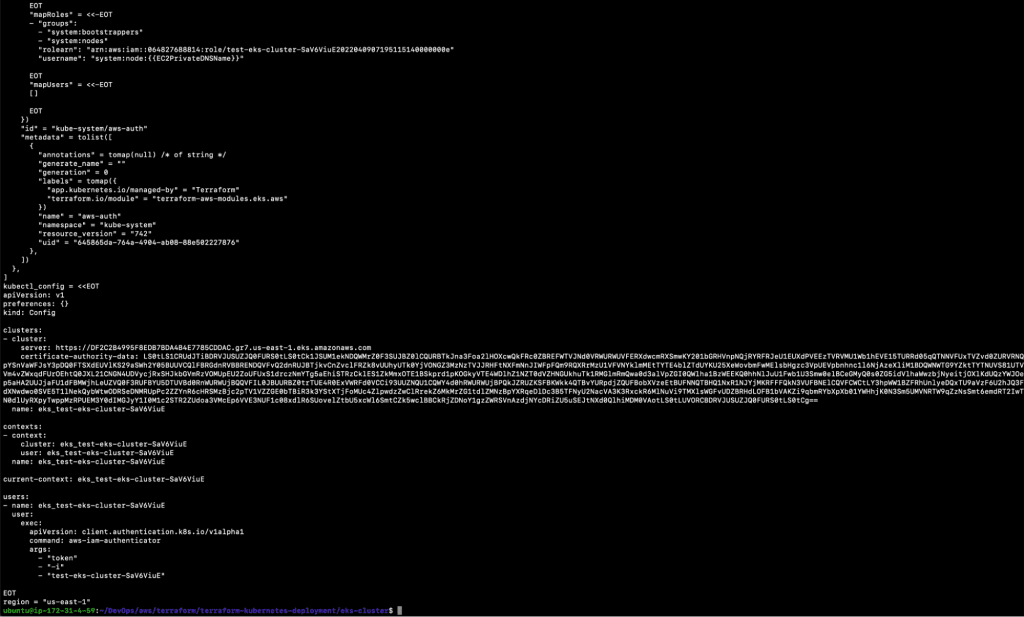
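The plan and apply steps can also be tied together so that “terraform apply” executes exactly the plan that was reviewed, rather than computing a fresh one. The following is a minimal sketch of that pattern. The function names and the `TF_BIN` override are hypothetical and exist only for this illustration, while `-out` and `-input=false` are standard Terraform CLI options.

```shell
# TF_BIN lets you point at a specific terraform binary; it defaults to
# whatever "terraform" resolves to on PATH. (Assumption for this sketch.)
TF_BIN="${TF_BIN:-terraform}"

# tf_save_plan [PLAN_FILE]
# Initializes the working directory and saves the execution plan to a file.
tf_save_plan() {
  plan_file="${1:-tfplan}"
  "$TF_BIN" init -input=false &&
    "$TF_BIN" plan -input=false -out="$plan_file"
}

# tf_apply_plan [PLAN_FILE]
# Applies a previously saved plan. Terraform does not ask for confirmation
# when it is given a saved plan file, so review the plan first.
tf_apply_plan() {
  plan_file="${1:-tfplan}"
  "$TF_BIN" apply -input=false "$plan_file"
}
```

In the eks-cluster directory, you would run `tf_save_plan`, inspect the result with `terraform show tfplan`, and then run `tf_apply_plan`.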

16. After the “terraform apply” command is successfully completed, you should see the output as depicted below.

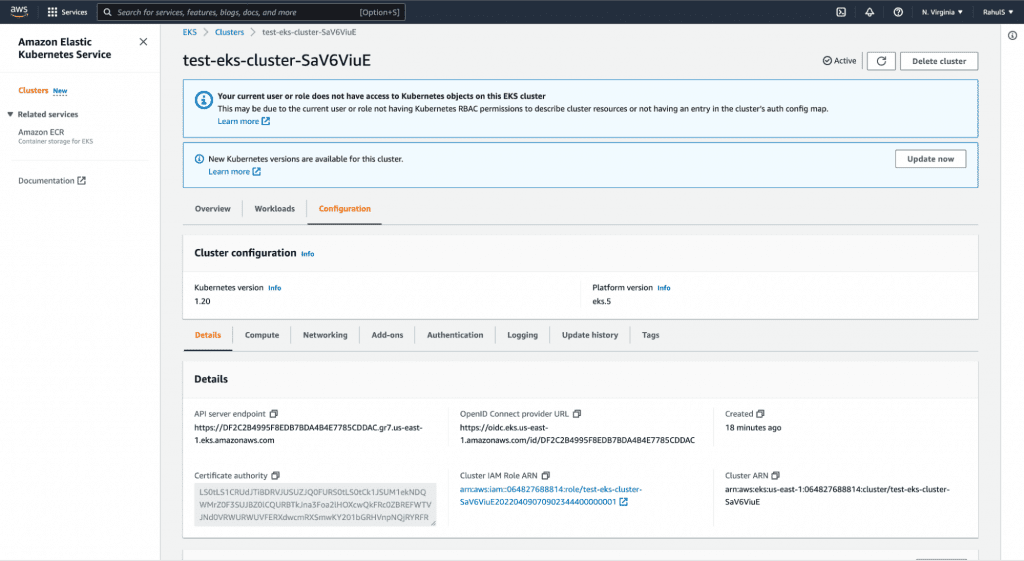

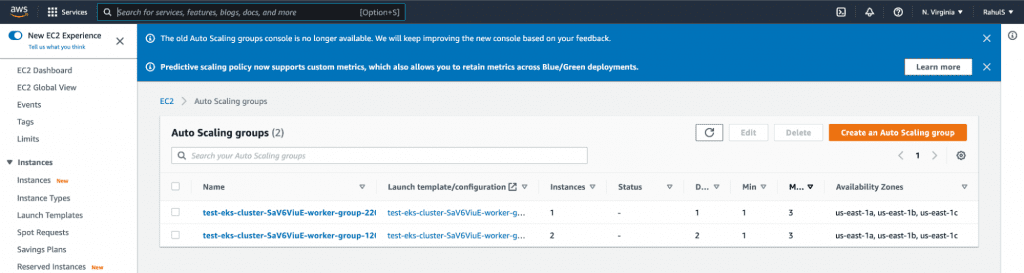

17. You can now go to the AWS Console and verify the resources created as part of the EKS Cluster.

17.1. EKS Cluster

You can check for other resources in the same way.

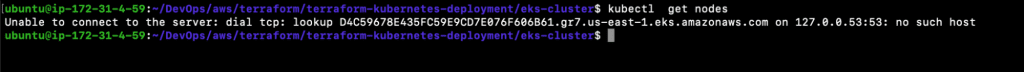

18. Now, if you try to use the kubectl command to connect to the EKS Cluster and control it, you will get an error, seeing as you do not yet have the kubeconfig file that kubectl uses for authentication.

18.1. kubectl get nodes

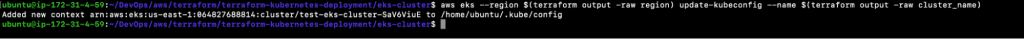

- To resolve the error mentioned above, retrieve the access credentials, i.e. update the ~/.kube/config file for the cluster and automatically configure kubectl so that you can connect to the EKS Cluster using the kubectl command. Execute the following command from the directory where all your .tf files used to create the EKS Cluster are located.

19.1. aws eks --region $(terraform output -raw region) update-kubeconfig --name $(terraform output -raw cluster_name)

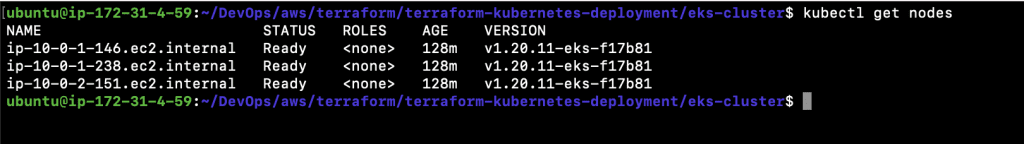

- Now, you are ready to connect to your EKS Cluster and check the nodes in the Kubernetes Cluster using the following command:

20.1. kubectl get nodes

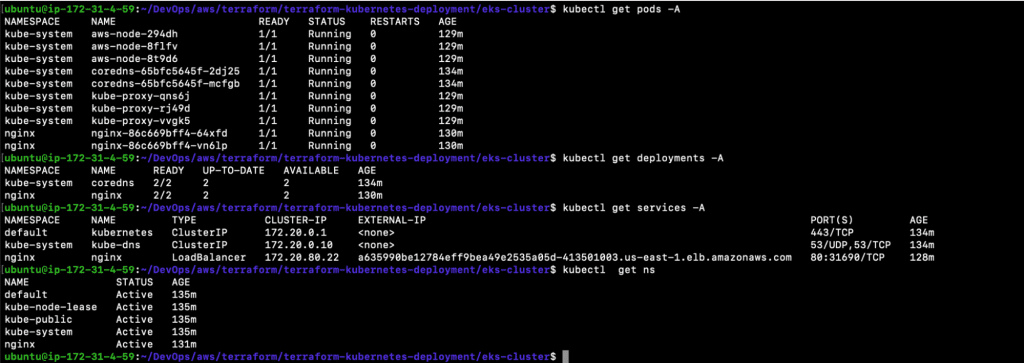

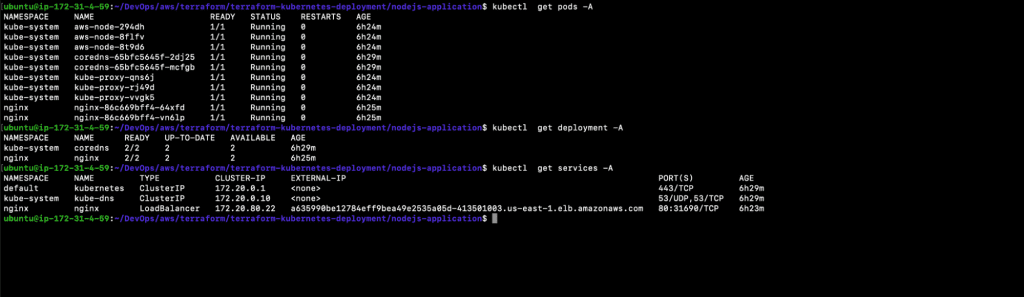
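Worker nodes can take a little while to register with the cluster, so “kubectl get nodes” may briefly show nodes that are not yet Ready. One possible way to block until they are is sketched below. `wait_for_nodes` and the `KUBECTL_BIN` override are hypothetical names for this illustration, and the default timeout is an arbitrary choice.

```shell
# KUBECTL_BIN defaults to the kubectl found on PATH. (Assumption for this sketch.)
KUBECTL_BIN="${KUBECTL_BIN:-kubectl}"

# wait_for_nodes [TIMEOUT]
# Blocks until every node reports the Ready condition or TIMEOUT elapses.
wait_for_nodes() {
  timeout="${1:-300s}"
  "$KUBECTL_BIN" wait --for=condition=Ready nodes --all --timeout="$timeout"
}
```

Calling `wait_for_nodes 600s` would wait up to ten minutes and return non-zero if any node is still not Ready by then.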

- Check the resources such as pods, deployments and services that are available in the Kubernetes Cluster across all namespaces.

21.1. kubectl get pods -A

21.2. kubectl get deployments -A

21.3. kubectl get services -A

21.4. kubectl get ns

In the screenshot above, you can see the Namespace, Pods, Deployment, and Service that we created with Terraform.

We have now created an EKS Cluster using Terraform and deployed Nginx with Terraform. Now, let’s see if we can deploy a sample NodeJs application using Terraform in the same EKS Cluster. This time, we will keep the Kubernetes objects files in a separate folder so that the NodeJs application can be managed independently, allowing us to deploy and/or destroy our NodeJs application without affecting the EKS Cluster.

How to Deploy a Sample Nodejs Application on the EKS Cluster Using Terraform?

In this part of the article, we will deploy a sample NodeJs application and its dependent resources, including Namespace, Deployment, and Service. We have used the publicly available Docker Images for the sample NodeJs application and MongoDB database.

Now, let’s go ahead with the deployment.

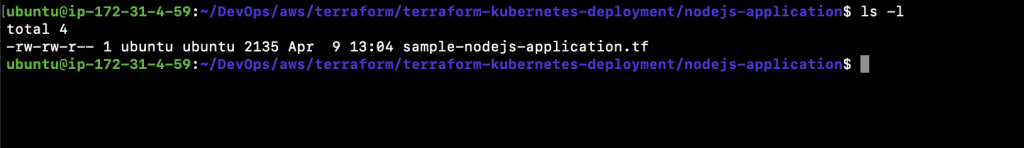

- Change your “Present Working Directory” to ~/DevOps/aws/terraform/terraform-kubernetes-deployment/nodejs-application/ if you have cloned my repository.

1.1. cd ~/DevOps/aws/terraform/terraform-kubernetes-deployment/nodejs-application/

- You should now have the required file.

- If you haven’t cloned the repo, feel free to create the required .tf file in a new folder. Please note that we are using a separate folder here.

- Create a sample-nodejs-application.tf file with the following content. In this case, we are using Terraform Kubernetes Provider to deploy a sample NodeJs application. We will be creating a Namespace, a NodeJs Deployment and its Service of type LoadBalancer, and a MongoDB Deployment and its Service of type ClusterIP.

    provider "kubernetes" {
      config_path = "~/.kube/config"
    }

    resource "kubernetes_namespace" "sample-nodejs" {
      metadata {
        name = "sample-nodejs"
      }
    }

    resource "kubernetes_deployment" "sample-nodejs" {
      metadata {
        name      = "sample-nodejs"
        namespace = kubernetes_namespace.sample-nodejs.metadata.0.name
      }
      spec {
        replicas = 1
        selector {
          match_labels = {
            app = "sample-nodejs"
          }
        }
        template {
          metadata {
            labels = {
              app = "sample-nodejs"
            }
          }
          spec {
            container {
              image = "learnk8s/knote-js:1.0.0"
              name  = "sample-nodejs-container"
              port {
                container_port = 80
              }
              env {
                name  = "MONGO_URL"
                value = "mongodb://mongo:27017/dev"
              }
            }
          }
        }
      }
    }

    resource "kubernetes_service" "sample-nodejs" {
      metadata {
        name      = "sample-nodejs"
        namespace = kubernetes_namespace.sample-nodejs.metadata.0.name
      }
      spec {
        selector = {
          app = kubernetes_deployment.sample-nodejs.spec.0.template.0.metadata.0.labels.app
        }
        type = "LoadBalancer"
        port {
          port        = 80
          target_port = 3000
        }
      }
    }

    resource "kubernetes_deployment" "mongo" {
      metadata {
        name      = "mongo"
        namespace = kubernetes_namespace.sample-nodejs.metadata.0.name
      }
      spec {
        replicas = 1
        selector {
          match_labels = {
            app = "mongo"
          }
        }
        template {
          metadata {
            labels = {
              app = "mongo"
            }
          }
          spec {
            container {
              image = "mongo:3.6.17-xenial"
              name  = "mongo-container"
              port {
                container_port = 27017
              }
            }
          }
        }
      }
    }

    resource "kubernetes_service" "mongo" {
      metadata {
        name      = "mongo"
        namespace = kubernetes_namespace.sample-nodejs.metadata.0.name
      }
      spec {
        selector = {
          app = kubernetes_deployment.mongo.spec.0.template.0.metadata.0.labels.app
        }
        type = "ClusterIP"
        port {
          port        = 27017
          target_port = 27017
        }
      }
    }

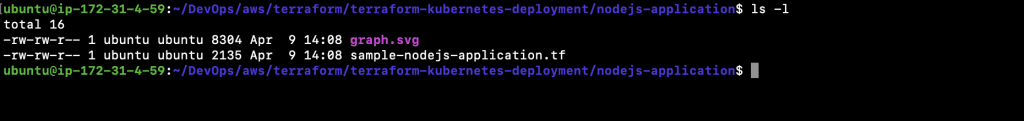

- At this point, you should have one file in your current directory, as depicted below.

5.1. sample-nodejs-application.tf

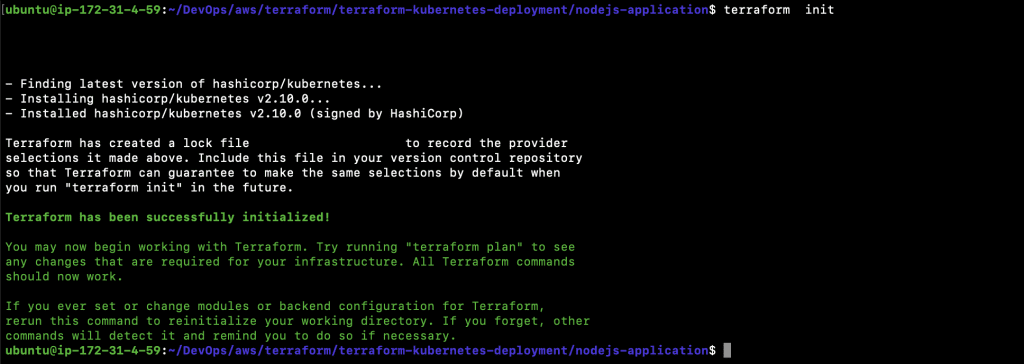

- To initialize the current working directory containing our Terraform configuration file (.tf file) and deploy a sample NodeJs application, execute the following command:

6.1. terraform init

- Next, execute the following command to preview the execution plan, i.e. the changes Terraform will make to reach the desired state of all the resources defined in the above .tf file.

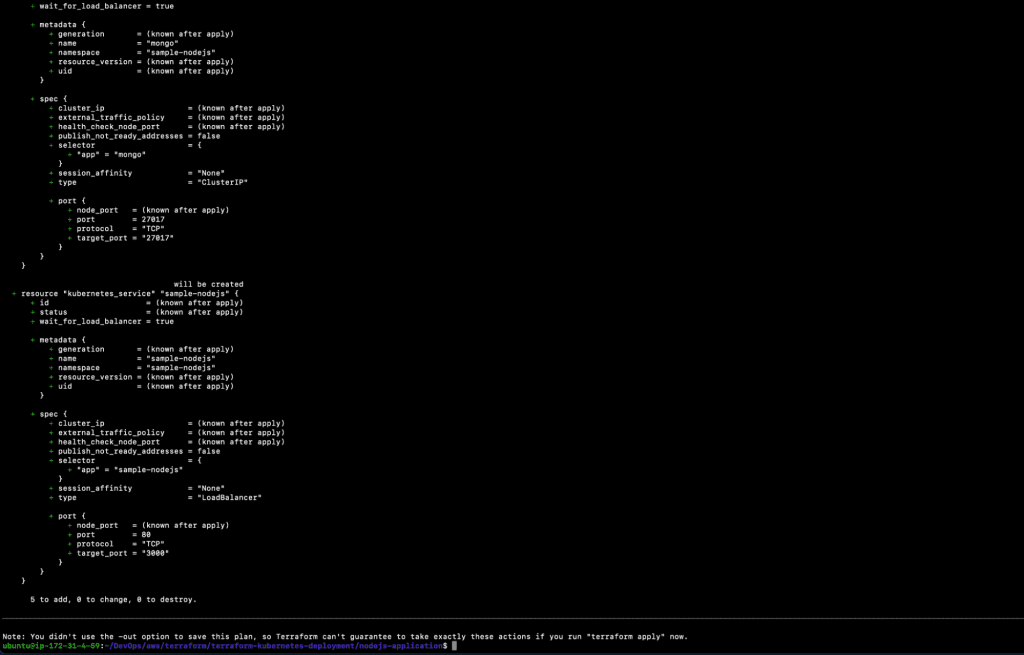

7.1. terraform plan

- Before we deploy a sample NodeJs application, let’s try generating a visual representation of our execution plan in the same way we did while creating the EKS Cluster. In this case, this is an optional step that you can skip if you don’t wish to see the graph. Once you execute the following command, you will get the output in a graph.svg file. You can try to open the file on your personal computer or online with SVG Viewer.

8.1. terraform graph -type plan | dot -Tsvg > graph.svg

- The next step is to deploy the sample Nodejs application using the .tf file in our current working directory. Execute the following command, and this time, don’t go and have a cup of tea, seeing as it should not take more than one minute to complete. Once the command has completed successfully, the sample NodeJs application will be deployed in the EKS Cluster using Terraform.

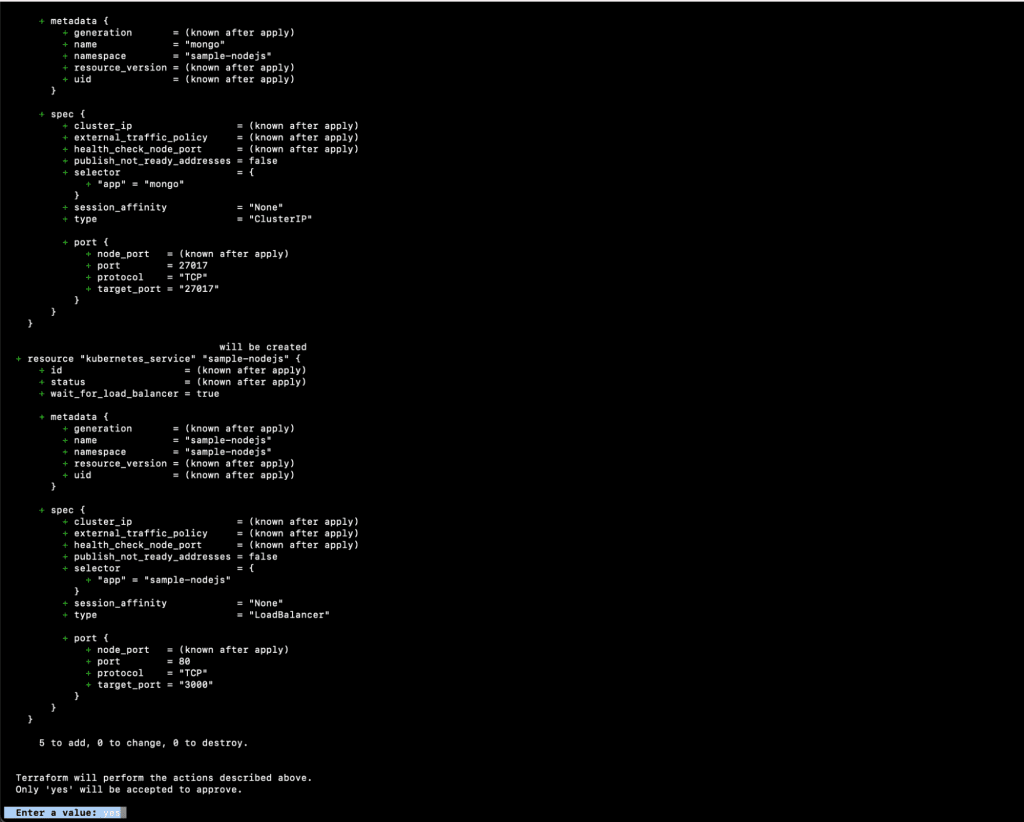

9.1. terraform apply

10. Once the “terraform apply” command is successfully completed, you should see the following output.

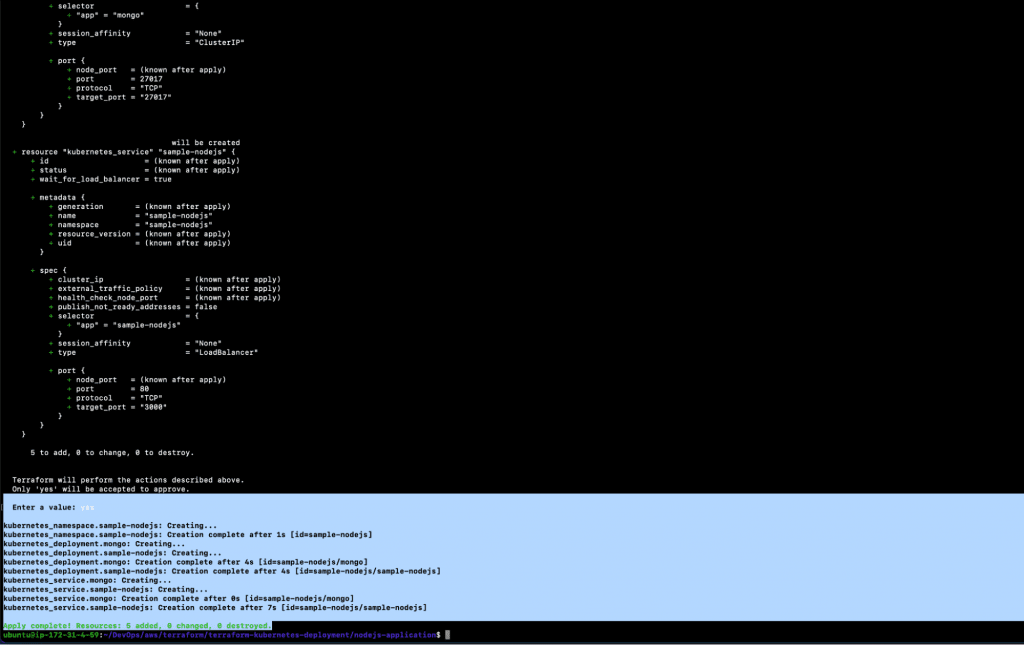

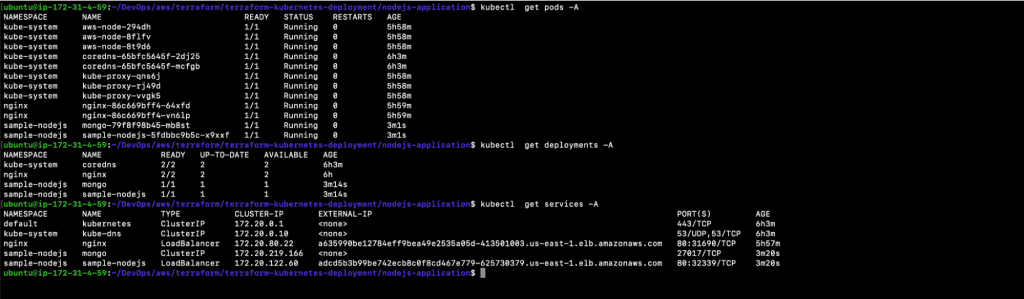

11. You can now verify the objects that have been created using the commands below.

11.1. kubectl get pods -A

11.2. kubectl get deployments -A

11.3. kubectl get services -A

In the above screenshot, you can see the Namespace, Pods, Deployment, and Service that were created for the sample NodeJs application.

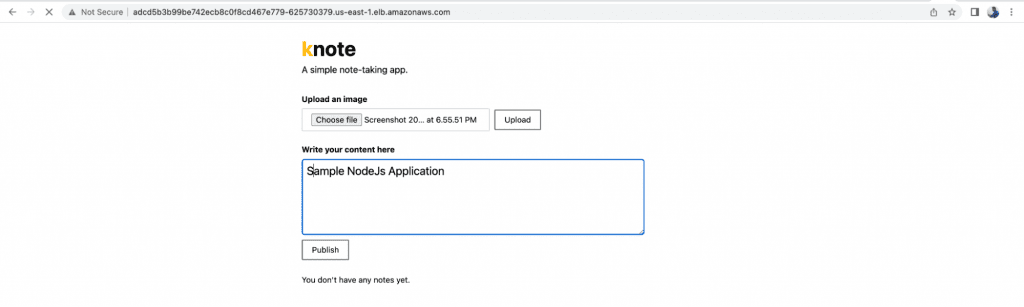

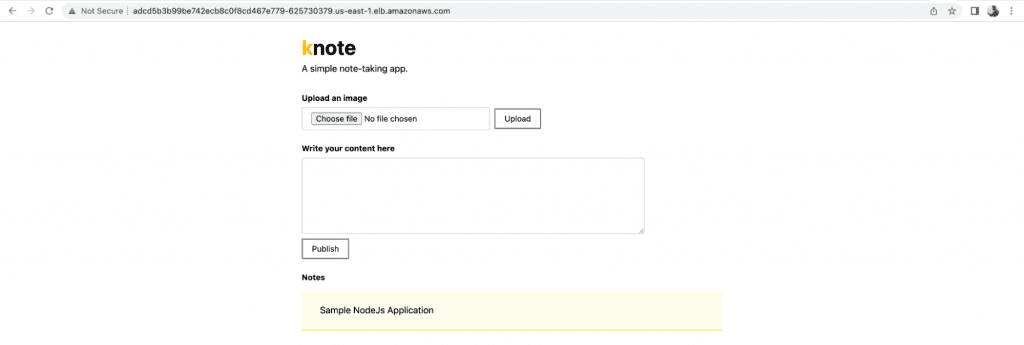

- You can also access the sample NodeJs application using the DNS of the LoadBalancer. Upload an image and add a note to it. Once you’ve published the image, it will be saved in the database.

It’s important to note that, in this case, we did not use any type of persistent storage for the application, therefore the data will not be retained after the pod is recreated or deleted. To retain data, try using PersistentVolume for the database.

We just deployed a sample NodeJs application that was publicly accessible over the LoadBalancer DNS using Terraform.
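To open the application, you need the DNS name of the LoadBalancer mentioned above. Besides reading it from the “kubectl get services -A” output, it can be extracted directly with a JSONPath query, roughly as sketched here. `lb_hostname` and the `KUBECTL_BIN` override are hypothetical names for this illustration. The namespace and Service names come from sample-nodejs-application.tf.

```shell
# KUBECTL_BIN defaults to the kubectl found on PATH. (Assumption for this sketch.)
KUBECTL_BIN="${KUBECTL_BIN:-kubectl}"

# lb_hostname NAMESPACE SERVICE
# Prints the DNS name AWS assigned to a Service of type LoadBalancer.
# The output is empty until the load balancer has been provisioned.
lb_hostname() {
  "$KUBECTL_BIN" get service "$2" --namespace "$1" \
    --output jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

For the sample application, `lb_hostname sample-nodejs sample-nodejs` prints the hostname to paste into a browser. It may take a few minutes after “terraform apply” before the name resolves.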

This completes the creation of the EKS Cluster and the deployment of the sample NodeJs application using Terraform.

Cleanup the Resources we Created

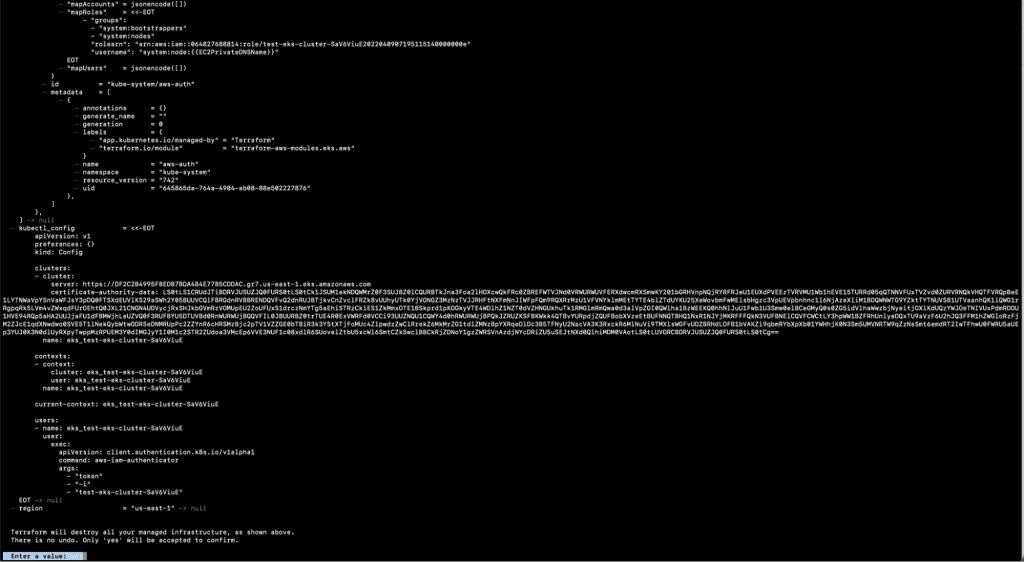

It’s always better to delete the resource once you’re done with the tests, seeing as this saves costs. To clean up the resources and delete the sample NodeJs application and EKS Cluster, follow the steps below.

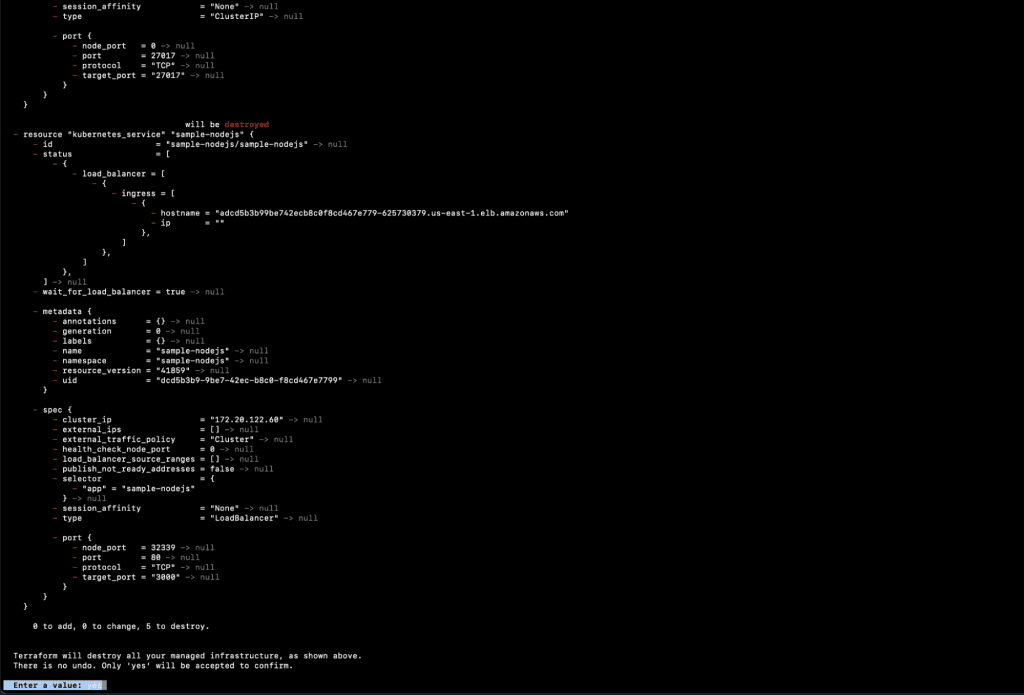

- Destroy the sample NodeJs application. Run the following commands from the nodejs-application directory.

1.1. terraform init

Execute this command only if “terraform destroy” fails with this error: “Error: Inconsistent dependency lock file.”

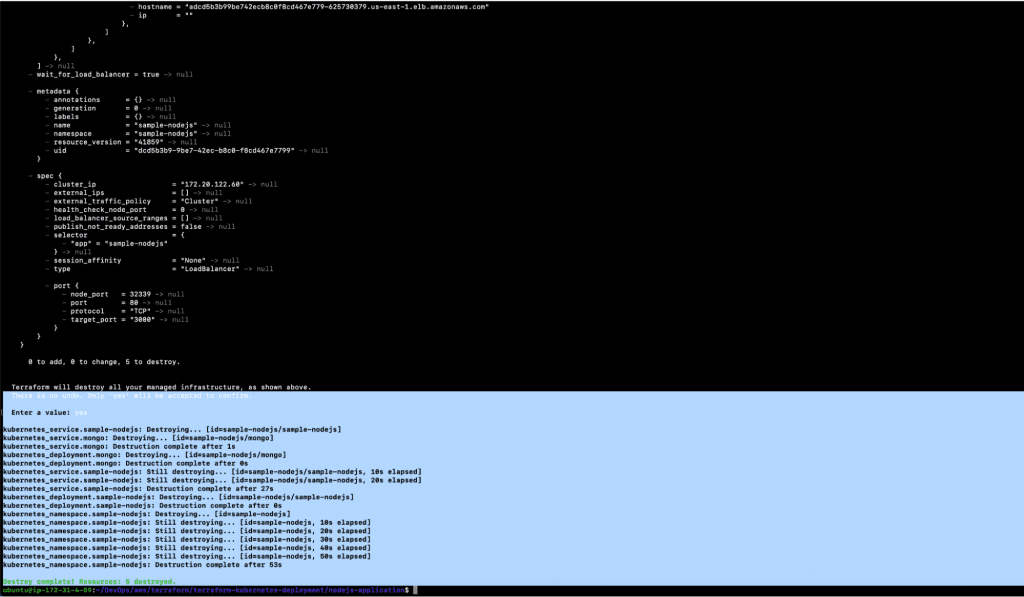

1.2. terraform destroy

- You should see the following output once the above command is successful.

- Validate whether or not the NodeJs application has been destroyed.

3.1. kubectl get pods -A

3.2. kubectl get deployment -A

3.3. kubectl get services -A

In the above screenshot you can see that all of the sample Nodejs application resources have been deleted.

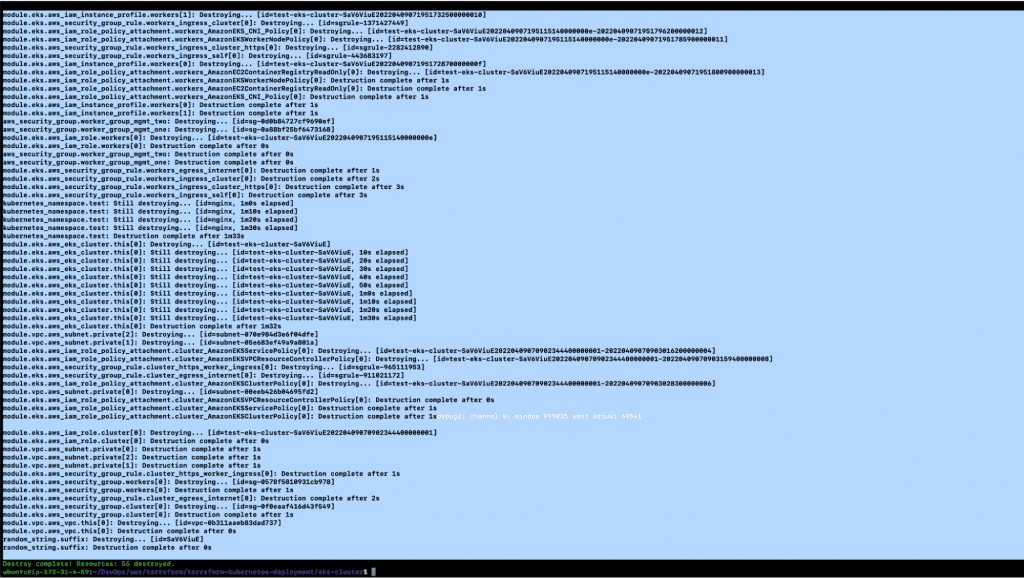

- Now, let’s destroy the EKS Cluster. Change to the eks-cluster directory and run the following commands:

4.1. terraform init

Execute this command only if “terraform destroy” fails with the following error: “Error: Inconsistent dependency lock file.”

4.2. terraform destroy

5. You will see the following output once the above command is successful.

6. You can now go to the AWS console to verify whether or not the resources have been deleted.

There you have it! We have just successfully deleted the EKS Cluster, as well as the sample NodeJs application.
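The order of the cleanup matters: the application is destroyed first, then the cluster it runs on. The following is a minimal sketch of the same sequence as a single function. `destroy_stack` and the `TF_BIN` override are hypothetical names, and the sketch assumes the two folder names used in this article. The `-chdir` option is available in Terraform 0.14 and later, which matches the required_version used here.

```shell
# TF_BIN defaults to the terraform found on PATH. (Assumption for this sketch.)
TF_BIN="${TF_BIN:-terraform}"

# destroy_stack BASE_DIR
# Destroys the application first, then the cluster it runs on.
# Stops at the first failure so the cluster is not removed while the
# application teardown is incomplete.
destroy_stack() {
  base_dir="$1"
  for dir in nodejs-application eks-cluster; do
    "$TF_BIN" -chdir="$base_dir/$dir" init -input=false || return 1
    "$TF_BIN" -chdir="$base_dir/$dir" destroy || return 1
  done
}
```

With the repository cloned as described earlier, the call would be `destroy_stack ~/DevOps/aws/terraform/terraform-kubernetes-deployment`. Terraform still asks for confirmation before each destroy.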

Conclusion of Terraform Kubernetes Deployment

Elastic Kubernetes Service (EKS) is a managed Kubernetes service provided by AWS, which takes the complexity and overhead out of provisioning and optimizing a Kubernetes Cluster for development teams. An EKS Cluster can be created using a variety of methods; nevertheless, using the best possible way is critical in improving the infrastructure management lifecycle.

Terraform is one of the Infrastructure as Code (IaC) tools that allows you to create, modify, and version control cloud and on-premise resources in a secure and efficient manner. You can use the Terraform Kubernetes Deployment method to create EKS Cluster using Terraform while automating the creation process of the EKS Cluster and having additional control over the entire infrastructure management process through code. The creation of the EKS Cluster and the deployment of Kubernetes objects can also be managed using Terraform Kubernetes Provider.

FAQs

You can definitely create an EKS Cluster from the AWS console, but what if you want to create the cluster for different environments such as Dev, QA, Staging, or Prod? To avoid human errors and maintain consistency across the different environments, it’s important to have an automation tool. Therefore, in such a case, it’s better to create the EKS Cluster using Terraform.

Terraform has the advantage of being able to use the same configuration language for both provisioning the Kubernetes Cluster and deploying apps to it. Moreover, Terraform allows you to build, update, and delete pods and resources with only one command, thus eliminating the need to check APIs to identify resources.

Terraform keeps track of everything it creates or manages in a State File. This State File can be stored on your local machine or on remote storage such as an S3 Bucket. State Files should be saved to remote storage so that everyone on the team works with the same state and performs actions against the same remote objects.
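As a rough illustration of the remote storage option, the sketch below generates a backend.tf file that points Terraform at an S3 bucket. `write_s3_backend` is a hypothetical helper name, and the bucket, key, and region you pass in are your own choices. The bucket itself must already exist, since Terraform does not create it for you.

```shell
# write_s3_backend BUCKET KEY REGION [FILE]
# Hypothetical helper: writes a Terraform S3 backend block to FILE
# (default: backend.tf). The bucket must already exist.
write_s3_backend() {
  cat > "${4:-backend.tf}" <<EOF
terraform {
  backend "s3" {
    bucket  = "$1"
    key     = "$2"
    region  = "$3"
    encrypt = true
  }
}
EOF
}
```

After writing the file into a configuration folder, run “terraform init” again there. Terraform detects the backend change and offers to copy the existing local state to the bucket.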