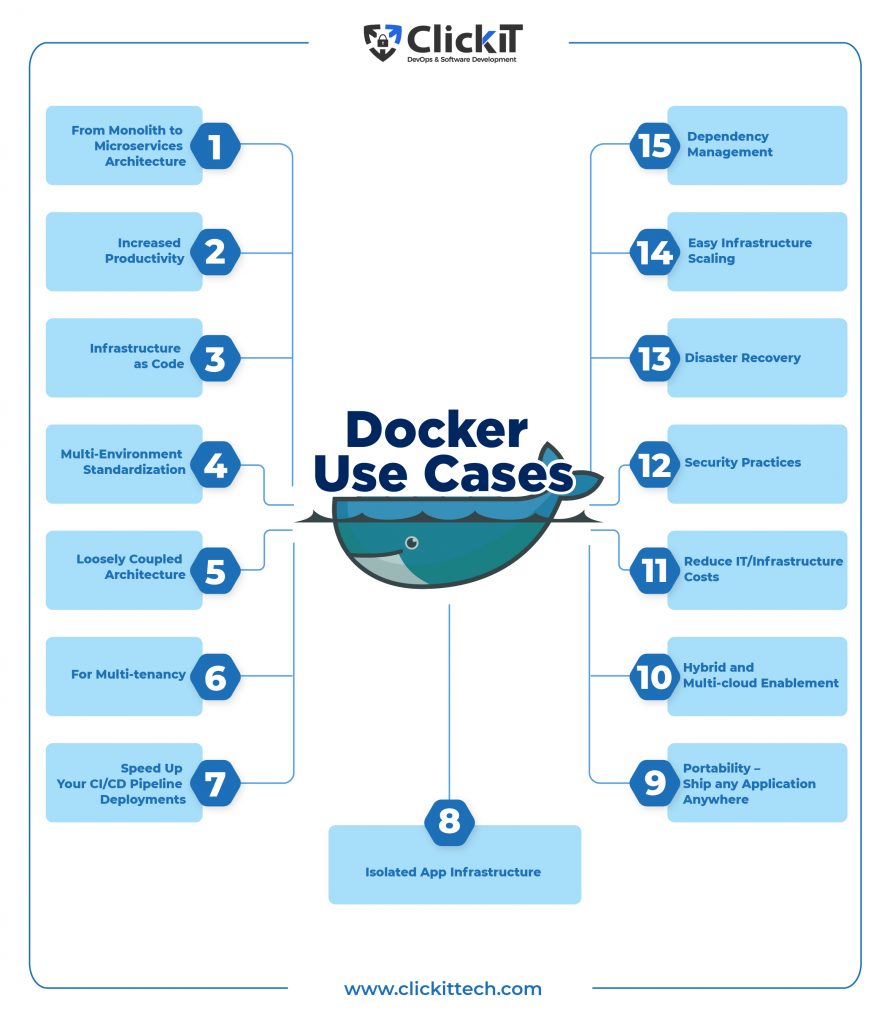

Docker is a containerization technology that lets developers package applications and their dependencies into standardized units that run the same way across environments. It is a natural fit for microservices architecture, in which applications are broken into independent, scalable services. Together, they increase productivity and let teams treat infrastructure as code.

Common use cases include migrating monolithic apps to microservices, speeding up CI/CD pipelines, and improving portability and security while reducing costs. Docker containers can also be orchestrated and managed at scale with container management systems such as Kubernetes, Amazon EKS/ECS/Fargate, Azure Kubernetes Service, and Google Kubernetes Engine.

- Defining Docker, Microservices and Containers

- What are the benefits of using Docker?

- Docker Use Cases

- Container Management Systems

- FAQs

Defining Docker, Microservices, and Containers

Docker is a containerization technology that enables developers to package a service, along with its dependencies, libraries, and the operating-system user space it needs, into a container. Containers share the host’s OS kernel rather than bundling their own. By separating apps from the infrastructure, Docker allows you to deploy and move apps across a variety of environments seamlessly.

Docker makes it very simple to create and manage containers using the following steps:

- Create a Dockerfile that describes how to build the application

- Build a Docker image based on the Dockerfile

- Create a running instance from the Docker image

- Scale containers on-demand
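The four steps can be sketched in Python. This is a minimal illustration rather than an official workflow: it assumes a hypothetical Python app (`app.py` plus `requirements.txt`), a hypothetical image name `myapp`, and a Compose service named `web`. It writes the Dockerfile for step 1 and returns the CLI commands for steps 2 to 4 instead of running them, so it works without a Docker daemon.

```python
import tempfile
from pathlib import Path

# Step 1: a minimal Dockerfile for a hypothetical Python app (app.py + requirements.txt).
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def write_dockerfile(project_dir: Path) -> Path:
    """Write the Dockerfile into the project directory and return its path."""
    path = project_dir / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def docker_commands(image: str = "myapp", service: str = "web", replicas: int = 3) -> list[list[str]]:
    """Return the CLI commands for steps 2-4 as argv lists (not executed here)."""
    return [
        # Step 2: build an image from the Dockerfile in the current directory.
        ["docker", "build", "-t", image, "."],
        # Step 3: start a running instance (container) of that image in the background.
        ["docker", "run", "-d", "--name", f"{image}-1", image],
        # Step 4: scale a Compose service out to several identical containers.
        ["docker", "compose", "up", "-d", "--scale", f"{service}={replicas}"],
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_dockerfile(Path(tmp)).read_text())
    for argv in docker_commands():
        print(" ".join(argv))
```

Passing each argv list to `subprocess.run(argv, check=True)` would perform the steps for real. Step 4 assumes a Compose file that defines the `web` service; at larger scale, this job is usually handed to an orchestrator.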

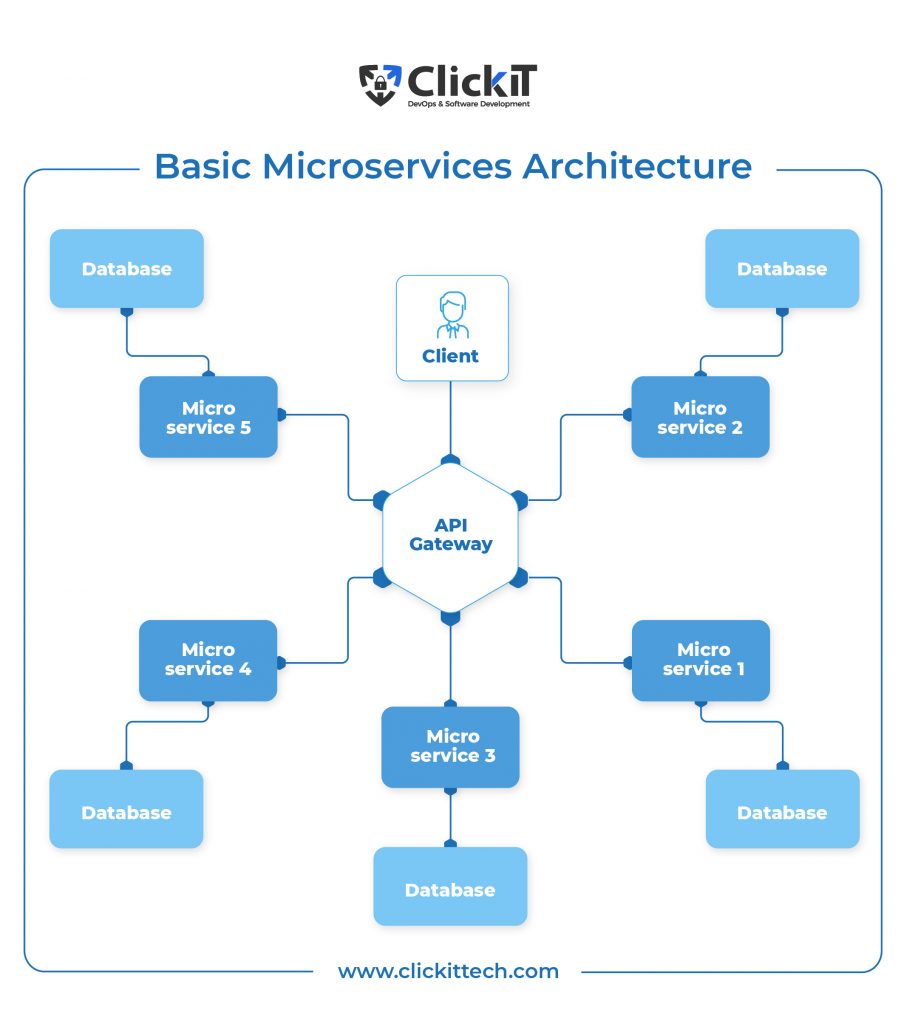

Microservices, or microservices architecture, addresses the challenges of large monolithic applications by allowing developers to break an application down into smaller, independent units that communicate with each other through APIs, typically REST APIs. Each function can be developed as an independent service, so it can be changed, deployed, and scaled without affecting any other service.

Therefore, organizations can accelerate release cycles, scale operations on demand, and seamlessly change code without application downtime. A popular Docker use case is migrating from a monolithic architecture to microservices.
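To make the idea of independent services talking over REST concrete, here is a minimal sketch using only Python’s standard library. The `inventory` service, its `/items/<id>` route, and the stock data are hypothetical. In a Docker deployment, each service would run in its own container and reach the other by its service name instead of `127.0.0.1`.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data owned by the inventory service alone.
STOCK = {"42": 7, "43": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Inventory microservice: GET /items/<id> returns the stock level as JSON."""

    def do_GET(self) -> None:
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "items" and parts[1] in STOCK:
            body = json.dumps({"id": parts[1], "stock": STOCK[parts[1]]}).encode()
            self.send_response(200)
        else:
            body = json.dumps({"error": "not found"}).encode()
            self.send_response(404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args) -> None:
        pass  # keep the demo output quiet


def start_inventory() -> HTTPServer:
    """Start the inventory service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def in_stock(base_url: str, item_id: str) -> bool:
    """Order-service side: ask the inventory service over HTTP before accepting an order."""
    with urllib.request.urlopen(f"{base_url}/items/{item_id}") as resp:
        return json.load(resp)["stock"] > 0


if __name__ == "__main__":
    srv = start_inventory()
    url = f"http://127.0.0.1:{srv.server_address[1]}"
    print(in_stock(url, "42"), in_stock(url, "43"))  # True False
    srv.shutdown()
```

The order logic never touches the inventory data directly; it only depends on the HTTP contract, which is what allows either service to be redeployed or scaled on its own.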

Containers are the most common way to package and run microservices. A container is a standard unit of software that isolates an application from its underlying infrastructure by packaging it with all its dependencies and required resources.

Unlike virtual machines, which virtualize the hardware and each run a full guest OS, containers virtualize at the operating-system level and share the host’s kernel. We will discuss container management and container management tools below.

What are the Benefits of Using Docker?

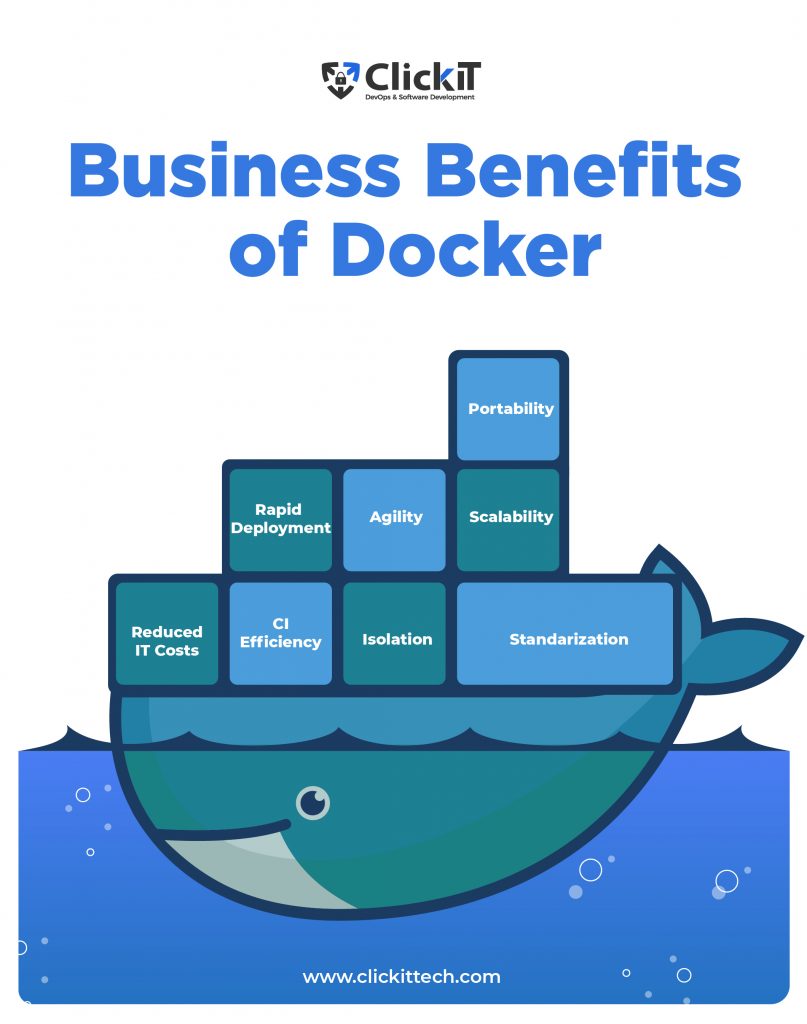

- Portability and ecosystem: Major cloud providers, such as AWS, GCP, and Azure, support Docker containers on their platforms. Docker containers also run on virtualization and private-cloud environments such as VirtualBox, Rackspace, and OpenStack, so you can move them between environments seamlessly.

- Scalability: Because containers are lightweight, organizations can run many of them on a single host and add more as demand grows, which can significantly reduce operational costs. Docker also lets you deploy services on commodity hardware, eliminating the cost of purchasing expensive servers.

- Cost savings and higher ROI: Docker is built around doing more with fewer resources and smaller engineering teams. Organizations can therefore run their operations on less infrastructure and with fewer staff to monitor and manage them.

- Faster deployments: Containers can be created and started in seconds, which speeds up deployments. Because multi-container setups can be defined, deployed, and scaled from a simple YAML config file, Docker is easy to use and shortens time to market. Running each service in its own isolated container also improves security.

Docker Use Cases

Docker Use Cases 1: From Monolith to Microservices Architecture

Microservices simplify software by breaking applications into independent services that communicate via APIs. This architecture supports DevOps practices, enabling faster deployments and easier scaling.

AI-driven container orchestration now enhances microservices by optimizing workload distribution and automating resource management. Docker is key in this transition, ensuring consistency across development and production.

With AI-powered security scans and automated CI/CD pipelines, teams can reduce complexity, improve efficiency, and deploy more confidently. AI-based load balancing improves performance, ensuring microservices run smoothly with minimal manual intervention.

Docker Use Cases 2: Increased Productivity

Docker environments facilitate automated builds, tests, and webhooks. This means you can connect Bitbucket or GitHub repositories to your build pipeline, create images automatically from the source code, and push them to a Docker Hub repository. A connected workflow between developers and CI/CD tools also means faster releases.
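The webhook-driven flow can be sketched as a small function that turns a GitHub push event into the build and push commands a CI job would run. The payload fields used (`ref`, `after`, `repository.name`) come from GitHub’s push event; the registry namespace `example-org` and the rule of building only the `main` branch are assumptions for illustration.

```python
from typing import Optional

REGISTRY_NAMESPACE = "example-org"  # hypothetical Docker Hub namespace


def commands_for_push(event: dict) -> Optional[list[list[str]]]:
    """Map a GitHub push-event payload to docker build/push commands.

    Returns None for pushes that should not trigger a build (here: any branch but main).
    """
    if event.get("ref") != "refs/heads/main":
        return None
    repo = event["repository"]["name"]
    short_sha = event["after"][:7]  # tag each image with the commit it was built from
    image = f"{REGISTRY_NAMESPACE}/{repo}:{short_sha}"
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
    ]


if __name__ == "__main__":
    push = {
        "ref": "refs/heads/main",
        "after": "9fceb02d0ae598e95dc970b74767f19372d61af8",
        "repository": {"name": "shop-api"},
    }
    for argv in commands_for_push(push):
        print(" ".join(argv))  # docker build -t example-org/shop-api:9fceb02 . / docker push ...
```

Tagging images with the commit SHA rather than only `latest` makes every image traceable to the exact source it was built from, which also makes rollbacks straightforward.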

Docker Hub provides a cloud-managed container registry, so you don’t have to run your own registry, which can get expensive as the underlying infrastructure scales. It also simplifies configuration, and role-based access control lets people across various teams access Docker images securely.

Offering accelerated development, automated workflows, and seamless collaboration, there’s no doubt that Docker increases productivity.

Docker Use Cases 3: Infrastructure as Code

Infrastructure as Code (IaC) means managing infrastructure through code. Resource provisioning is defined in configuration files, so the infrastructure can benefit from software best practices such as CI/CD processes, automation, reusability, and versioning.

Docker brings IaC into the development phase of the CI/CD pipeline. Developers can use Docker Compose to build composite apps using multiple services and ensure they work consistently across the pipeline. IaC is a typical example of a Docker use case.
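Because a Compose file is structured data, it can be generated, reviewed, and versioned like any other code. A minimal sketch, assuming a hypothetical two-service app (a `web` service built from the local Dockerfile plus a `redis` cache) and a hypothetical `REDIS_HOST` variable that the app reads. It emits JSON, which Compose can read because YAML is a superset of JSON.

```python
import json


def compose_config(web_port: int = 8000, redis_tag: str = "7") -> dict:
    """Build a Compose configuration for a hypothetical web app plus a Redis cache."""
    return {
        "services": {
            "web": {
                "build": ".",  # image built from the Dockerfile in this directory
                "ports": [f"{web_port}:{web_port}"],
                "environment": {"REDIS_HOST": "redis"},  # services reach each other by name
                "depends_on": ["redis"],
            },
            "redis": {"image": f"redis:{redis_tag}"},
        }
    }


if __name__ == "__main__":
    # Redirect this output to compose.json and commit it alongside the code.
    print(json.dumps(compose_config(), indent=2))
```

Saving the output as `compose.json` and running `docker compose -f compose.json up -d` would start both services; on the network Compose creates, `web` reaches the cache at the host name `redis`.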

Docker Use Cases 4: Multi-Environment Standardization

Docker gives every team member an environment that matches production across the pipeline. Consider a growing software development team. When a new member joins, they have to install or update the operating system, database, Node.js, Yarn, and so on, and it can take one to two days to get a machine ready. Furthermore, ensuring everyone has the same OS, program versions, database versions, Node.js versions, code editor extensions, and configurations is challenging.

AI-powered configuration management further streamlines this process by detecting inconsistencies and automatically synchronizing environments across development, testing, and production. This prevents last-minute dependency issues and reduces onboarding time for new team members.

With Docker, teams achieve consistent, conflict-free environments, minimize setup delays, and ensure smooth CI/CD workflows.
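A simple rule-based stand-in for the inconsistency check described above (not an AI tool) compares the tool versions each environment reports against the versions pinned in the team’s image. The tool names, versions, and environment names below are hypothetical.

```python
def find_drift(pinned: dict[str, str], environments: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return, per environment, the differences from the pinned versions (clean envs omitted)."""
    report: dict[str, list[str]] = {}
    for env_name, installed in environments.items():
        problems = []
        for tool, wanted in sorted(pinned.items()):
            have = installed.get(tool)
            if have is None:
                problems.append(f"{tool}: missing (want {wanted})")
            elif have != wanted:
                problems.append(f"{tool}: {have} != {wanted}")
        if problems:
            report[env_name] = problems
    return report


if __name__ == "__main__":
    pinned = {"node": "20.11.1", "postgres": "16.2", "yarn": "1.22.19"}
    envs = {
        "alice-laptop": {"node": "18.19.0", "postgres": "16.2", "yarn": "1.22.19"},
        "ci-runner": dict(pinned),  # built from the same image, so identical
        "staging": {"node": "20.11.1", "postgres": "16.2"},
    }
    for env, problems in find_drift(pinned, envs).items():
        print(env, problems)
```

When every environment runs the same image, the report stays empty by construction; the check only matters for machines set up by hand.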

Docker Use Cases 5: Loosely Coupled Architecture

Docker helps developers package each service into a container with the required resources, making it easy to deploy, move, and update it.

Telecom companies are leveraging 5G and Docker’s support for software-defined networking to build loosely coupled architectures. 5G supports network function virtualization, which allows telecoms to virtualize network appliance hardware. As a result, they can develop each network function as a service and package it into a container.

These containers can be installed on commodity hardware, allowing telecoms to eliminate the need for expensive hardware infrastructure and thus significantly reduce costs. The relatively recent entrance of public cloud providers into the telecom market has shrunk the profits of telecom operators and ISVs. They can now use Docker to build cost-effective public clouds with the existing infrastructure, turning Docker use cases into new revenue streams.

Docker Use Cases 6: For Multi-tenancy

Multi-tenancy is a cloud deployment model in which a single installed application serves multiple customers, with each customer’s data wholly isolated. SaaS apps mostly use this approach.

There are 4 common approaches to a multi-tenancy model:

- Shared Database – Isolated Schema: All tenants’ data is stored in a single database, with a separate schema for each tenant. The isolation level is medium.

- Shared Database – Shared Schema: All tenants’ data is stored in the same tables of a single database, and a foreign key (a tenant ID column) identifies each tenant’s rows. The isolation level is low.

- Isolated Database – Shared App Server: Each tenant’s data is stored in a separate database. The isolation level is high.

- Docker-based Isolated Tenants: Each tenant’s data is stored in a separate database, and each tenant gets its own set of containers.

While tenant data is separated in the first three approaches, they all use the same application server for every tenant. Docker allows complete isolation, in which each tenant’s app code runs inside its own container.

To do this, organizations can package the app code as a Docker image and use a docker-compose.yaml file to define the configuration for multi-container, multi-tenant apps, running a separate set of containers for each tenant. Each tenant gets its own Postgres database container and app server container, so two tenants, for example, need two database servers and two app servers. An NGINX container in front of them can route each request to the correct tenant’s containers.
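The per-tenant layout can be generated rather than written by hand. This sketch assumes hypothetical tenant names, an app image called `tenant-app` that listens on port 8000 and reads a hypothetical `DATABASE_HOST` variable, and routing by subdomain (`<tenant>.example.com`). It builds one app container and one Postgres container per tenant plus an NGINX container, and an NGINX `map` block that picks the upstream from the `Host` header.

```python
import json


def tenant_services(tenants: list[str], app_image: str = "tenant-app") -> dict:
    """One app container and one Postgres container per tenant, plus an NGINX router."""
    services: dict[str, dict] = {}
    for t in tenants:
        services[f"db-{t}"] = {
            "image": "postgres:16",
            "environment": {"POSTGRES_DB": t, "POSTGRES_PASSWORD": "change-me"},  # placeholder secret
        }
        services[f"app-{t}"] = {
            "image": app_image,
            "environment": {"DATABASE_HOST": f"db-{t}"},  # hypothetical variable name
            "depends_on": [f"db-{t}"],
        }
    services["nginx"] = {
        "image": "nginx:stable",
        "ports": ["80:80"],
        "depends_on": [f"app-{t}" for t in tenants],
    }
    return {"services": services}


def nginx_map(tenants: list[str], domain: str = "example.com", port: int = 8000) -> str:
    """NGINX map block from the request's Host header to that tenant's app container."""
    lines = ["map $host $tenant_upstream {", '    default "";']
    lines += [f"    {t}.{domain} app-{t}:{port};" for t in tenants]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    tenants = ["acme", "globex"]
    print(json.dumps(tenant_services(tenants), indent=2))
    print(nginx_map(tenants))
```

In the NGINX server block, the upstream would then be used as `proxy_pass http://$tenant_upstream;`. Because the target is a variable, NGINX resolves it per request and also needs a `resolver 127.0.0.11;` line pointing at Docker’s embedded DNS server.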

Docker Use Cases 7: Speed Up Your CI/CD Pipeline Deployments

Containers launch in seconds, enabling fast code deployments and quick updates in CI/CD pipelines. However, long build times can slow things down, especially when dependencies must be pulled each time.

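Docker’s layer cache helps optimize builds, but remote runners often start with an empty local cache. Passing the `--cache-from` option to `docker build` lets them reuse layers from a previously built image, such as one pulled from your registry, which speeds up execution.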

AI-driven pipeline automation takes this further by analyzing past builds, detecting code changes, and optimizing execution order. AI prioritizes critical builds, runs tests in parallel, and reduces deployment time, making CI/CD pipelines faster and smarter.
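Why instruction order matters for the cache can be shown with a simplified model. This is not Docker’s actual cache implementation, only an approximation of its rule: a layer is reused when its parent layer, its instruction, and (for `COPY`) the copied files are unchanged, and once one layer misses, every layer after it is rebuilt. File names and contents are hypothetical.

```python
import hashlib

# (instruction, files it copies) for two orderings of the same hypothetical build.
GOOD = [  # dependencies first, source code last
    ("FROM python:3.12-slim", ()),
    ("COPY requirements.txt .", ("requirements.txt",)),
    ("RUN pip install -r requirements.txt", ()),
    ("COPY app.py .", ("app.py",)),
]
BAD = [  # copying everything first ties the dependency layer to every source change
    ("FROM python:3.12-slim", ()),
    ("COPY . .", ("requirements.txt", "app.py")),
    ("RUN pip install -r requirements.txt", ()),
]


def build(steps: list[tuple[str, tuple[str, ...]]], files: dict[str, str], cache: set[str]) -> list[str]:
    """Simulate a build; return 'cached' or 'rebuilt' per step and record keys in cache."""
    results: list[str] = []
    parent = ""
    for instruction, copied in steps:
        # COPY layers also depend on the contents of the files they copy.
        content = "|".join(f"{name}={files[name]}" for name in copied)
        key = hashlib.sha256(f"{parent}|{instruction}|{content}".encode()).hexdigest()
        results.append("cached" if key in cache else "rebuilt")
        cache.add(key)
        parent = key  # chaining: a changed layer invalidates every later layer
    return results


if __name__ == "__main__":
    v1 = {"requirements.txt": "flask==3.0.0", "app.py": "print('v1')"}
    v2 = {**v1, "app.py": "print('v2')"}  # only the source code changed
    for name, steps in (("deps first", GOOD), ("copy all first", BAD)):
        cache: set[str] = set()
        build(steps, v1, cache)  # first build fills the cache
        print(name, build(steps, v2, cache))
```

With dependencies installed before the source is copied, a code-only change rebuilds just the last layer; copying everything first forces the slow `pip install` layer to rerun on every commit.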

Docker Use Cases 8: Isolated App Infrastructure

One of Docker’s key advantages is its isolated application infrastructure. Each container is packaged with all its dependencies, so you don’t need to worry about dependency conflicts. You can quickly deploy and run multiple applications on one or more machines, regardless of which runtimes, libraries, and app versions each one requires. For example, if two services need different versions of the same application, running them in independent containers eliminates the dependency conflict.

For automation and debugging, you can open a shell or run commands inside any running container with docker exec, without installing an SSH server in it. Since each service or daemon is isolated, it’s easy to monitor the applications and resources running inside a container and quickly identify errors. Containers also make it practical to run an immutable infrastructure, where changes are rolled out by replacing containers rather than modifying them, minimizing downtime from infrastructure changes.
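As an illustration of that isolation, two versions of the same server can run side by side on one host, each in its own container, and be inspected with `docker exec` rather than SSH. This sketch only builds the command lines; the container names and host ports are assumptions, and the images are the official `redis` images.

```python
def side_by_side(versions: list[str], first_host_port: int = 6379) -> list[list[str]]:
    """docker run commands for several Redis versions, each published on its own host port."""
    cmds = []
    for i, version in enumerate(versions):
        cmds.append([
            "docker", "run", "-d",
            "--name", f"redis-{version}",
            "-p", f"{first_host_port + i}:6379",  # inside every container, Redis still uses 6379
            f"redis:{version}",
        ])
    return cmds


def debug_command(container: str) -> list[str]:
    """Run a one-off command inside a running container; no SSH server required."""
    return ["docker", "exec", container, "redis-cli", "INFO", "server"]


if __name__ == "__main__":
    for argv in side_by_side(["6", "7"]) + [debug_command("redis-7")]:
        print(" ".join(argv))
```

Only the host-side ports differ; each container keeps the default port and its own copy of the binaries, so the two versions never see each other’s files.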

Docker Use Cases 9: Portability – Ship any Application Anywhere

Docker ensures seamless portability by packaging applications with all dependencies, allowing them to run consistently across environments.

Unlike traditional deployments, Docker containers avoid most OS-specific issues: they bundle their own user-space libraries and share the host machine’s OS kernel instead of running a full guest OS. This makes them lightweight and easy to move between development, testing, and production without significant reconfiguration.

Now, AI-powered deployment tools further enhance portability by automatically adapting containerized applications for different environments, detecting compatibility issues, and optimizing configurations. This ensures faster, error-free deployments across cloud, on-prem, and hybrid infrastructures.

Docker Use Cases 10: Hybrid and Multi-cloud Enablement

According to Channel Insider, the top three drivers of Docker adoption in organizations are hybrid clouds, VMware costs and pressure from testing teams. Although hybrid clouds are flexible and allow you to run customized solutions, distributing the load across multiple environments can be challenging. Cloud providers must compromise on costs or feature sets to facilitate seamless movement between clouds.

Docker eliminates these interoperability issues because its containers run similarly in both on-premise and cloud deployments. You can seamlessly move them between testing and production environments or internal clouds built using multiple cloud vendor offerings. Also, the complexity of deployment processes is reduced.

Thanks to Docker, organizations can build hybrid and multi-cloud environments comprising two or more public or private clouds from different vendors. Migrating workloads from AWS to Azure, for example, becomes much easier. Plus, you can select services and distribute them across different clouds based on security protocols and service-level agreements.

Docker Use Cases 11: Reduce IT/Infrastructure Costs

Docker lets you run more apps on fewer provisioned resources, making resource use more efficient. For example, developer teams can consolidate workloads onto a single server, reducing infrastructure costs.
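The consolidation argument is essentially a bin-packing problem. A minimal sketch using the first-fit-decreasing heuristic (a simple stand-in, not how any particular orchestrator places containers), with hypothetical memory figures and ignoring CPU and other limits, compares one machine per app against packing containers onto shared 8 GB hosts:

```python
def first_fit_decreasing(demands_gb: dict[str, float], host_capacity_gb: float) -> list[list[str]]:
    """Place each app on the first host with enough free memory, largest apps first."""
    hosts: list[tuple[float, list[str]]] = []  # (free memory, apps placed on the host)
    for app, need in sorted(demands_gb.items(), key=lambda kv: kv[1], reverse=True):
        if need > host_capacity_gb:
            raise ValueError(f"{app} needs more memory than one host offers")
        for i, (free, placed) in enumerate(hosts):
            if need <= free:
                hosts[i] = (free - need, placed + [app])
                break
        else:  # no existing host had room: start a new one
            hosts.append((host_capacity_gb - need, [app]))
    return [placed for _, placed in hosts]


if __name__ == "__main__":
    apps = {"api": 3.0, "worker": 2.5, "web": 1.5, "cron": 0.5, "cache": 2.0, "auth": 1.0}
    placement = first_fit_decreasing(apps, host_capacity_gb=8.0)
    print(f"one machine per app: {len(apps)}; packed containers: {len(placement)} hosts")
    print(placement)
```

With these made-up numbers, six apps fit on two hosts instead of six machines; real savings depend on actual resource profiles and headroom for spikes.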

Combined with an orchestrator and pay-as-you-go cloud infrastructure, Docker scales well: you can provision the resources you need at a given moment and automatically scale the infrastructure on demand, paying only for the resources you use. Moreover, apps running inside Docker behave the same way across the CI/CD pipeline, from development to testing, staging, and production, which minimizes environment-related bugs and errors.

This environment parity enables organizations to manage the infrastructure with minimal staff and technical resources, considerably reducing maintenance costs.

Because Docker enhances productivity, you may not need to hire as many developers as you would in a traditional software development environment.

Docker Use Cases 12: Security Practices

Docker containers are isolated by default: each has its own process tree, filesystem, and network stack, and runs with a restricted set of Linux capabilities, which limits what a compromised container can reach. Network access between containers can be restricted further by placing services on separate user-defined networks.

AI-powered security tools now strengthen container defenses by detecting vulnerabilities, monitoring runtime behavior, and preventing DDoS attacks through automated resource throttling. AI also helps enforce compliance by scanning Docker images for misconfigurations and security risks before deployment.

Using signed images and least-privilege access controls minimizes threats, while security frameworks such as SELinux, AppArmor, and grsecurity offer additional protection. AI-driven anomaly detection now provides real-time monitoring, instantly flagging suspicious activity and securing Docker environments more effectively.
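Least-privilege settings can be applied per container with standard `docker run` options. The sketch below assembles such a command; the image name, UID, and limits are hypothetical. Setting `DOCKER_CONTENT_TRUST=1` in the environment enables Docker Content Trust, which makes the CLI reject unsigned image tags.

```python
import os


def hardened_run(image: str, name: str, uid: int = 10001) -> tuple[list[str], dict[str, str]]:
    """Return (argv, environment) for a least-privilege docker run (not executed here)."""
    argv = [
        "docker", "run", "-d", "--name", name,
        "--user", f"{uid}:{uid}",               # do not run as root inside the container
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation, e.g. via setuid binaries
        "--read-only",                          # read-only root filesystem...
        "--tmpfs", "/tmp",                      # ...with a writable scratch area
        "--memory", "512m", "--cpus", "1",      # resource caps limit the blast radius of abuse
        "--pids-limit", "200",                  # guard against fork bombs
        image,
    ]
    env = {**os.environ, "DOCKER_CONTENT_TRUST": "1"}  # only signed image tags are accepted
    return argv, env


if __name__ == "__main__":
    argv, env = hardened_run("example-org/api:1.4.2", "api")
    print(" ".join(argv))  # subprocess.run(argv, env=env, check=True) would execute it
```

An application that genuinely needs a capability (for example, binding a port below 1024) can have that single one added back with `--cap-add`, which keeps the default posture restrictive.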

Docker Use Cases 13: Disaster Recovery

Ensuring business continuity in hybrid and multi-cloud environments requires resilience and rapid failure recovery. Docker simplifies disaster recovery by enabling fast container recreation and deployment, minimizing downtime.

AI-driven monitoring and self-healing mechanisms now enhance this process by detecting failures in real-time and automatically restarting or reallocating containers. Predictive analytics also help identify potential failures before they occur, allowing proactive mitigation.

A robust disaster recovery plan for critical applications should include backup container images, automated failover strategies, and redundancy across multiple hosts to ensure seamless recovery with minimal disruption.
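Automated failover boils down to noticing a failed host and recreating its containers elsewhere from the same images. A simplified sketch of that rescheduling step; the host and container names and the least-loaded placement rule are assumptions, and real orchestrators also weigh resources, affinity rules, and health checks.

```python
def reschedule(placement: dict[str, list[str]], failed_host: str) -> dict[str, list[str]]:
    """Move containers off a failed host, each onto the currently least-loaded healthy host."""
    if failed_host not in placement:
        return placement
    healthy = {h: list(cs) for h, cs in placement.items() if h != failed_host}  # copy, don't mutate
    if not healthy:
        raise RuntimeError("no healthy hosts left to recover onto")
    for container in placement[failed_host]:
        target = min(healthy, key=lambda h: (len(healthy[h]), h))  # ties broken by host name
        healthy[target].append(container)
    return healthy


if __name__ == "__main__":
    before = {
        "host-a": ["api-1", "api-2", "web-1"],
        "host-b": ["api-3"],
        "host-c": ["web-2", "db-replica"],
    }
    print(reschedule(before, "host-a"))
```

Because containers are recreated from images, this only works if those images are available from a registry the surviving hosts can reach, which is why backup container images belong in the recovery plan.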

Docker Use Cases 14: Easy Infrastructure Scaling

Docker augments the microservices architecture, wherein applications are broken down into independent services and packaged into containers. Organizations are using microservices and cloud architectures to build distributed applications.

Docker lets you instantly spin up identical containers for an application and horizontally scale the infrastructure. As the number of containers increases, you must use a container orchestration tool such as Kubernetes or Docker Swarm.

These tools have built-in autoscaling that scales the infrastructure up and down on demand. They also help you optimize costs by not running containers you don’t need. Keeping components fine-grained makes orchestration easier, and designing them to be stateless and disposable makes each container’s lifecycle easy to monitor and manage.
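For a sense of how such autoscaling decides, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), skipping changes when the ratio is within a tolerance of 1.0 (0.1 by default). A Python transcription of that rule, with hypothetical min/max bounds; the real controller adds stabilization windows and other safeguards not modeled here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA formula, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: leave the deployment alone
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5: scale out
    print(desired_replicas(5, current_metric=20, target_metric=60))  # 2: scale in
    print(desired_replicas(4, current_metric=63, target_metric=60))  # 4: within tolerance
```

The tolerance band keeps small metric fluctuations from causing constant scale-up and scale-down churn.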

Docker Use Cases 15: Dependency Management

Managing dependencies across applications and environments can be complex, but Docker eliminates conflicts by packaging everything an app needs into a container. This ensures consistent behavior, regardless of OS or environment.

Now, AI-powered dependency resolution further streamlines this process by automatically detecting and resolving version conflicts across microservices. AI-driven tools can also analyze container configurations and suggest optimizations to improve compatibility and performance.

With Docker, teams avoid manual dependency tracking, ensuring seamless deployments across machines while reducing errors and setup time.

Companies Powered by Docker

PayPal

PayPal processes around 200 payments per second across three different systems: PayPal, Venmo, and Braintree. Moving services between different clouds and architectures delayed deployment and maintenance tasks.

PayPal therefore implemented Docker and standardized its apps and operations across its infrastructure. To date, the company has migrated 700 apps to Docker and works with 4,000 software employees, managing 200,000 containers and more than 8 billion transactions per year while achieving a 50% increase in productivity.

Adobe

Adobe also uses Docker for containerization tasks. For instance, ColdFusion is Adobe’s web programming language and application server that facilitates communication between web apps and backend systems. Adobe uses Docker to containerize and deploy ColdFusion services, and hosts the Docker images on Docker Hub and Amazon Elastic Container Registry. Users can therefore pull these images to their local machine and run them with Docker commands.

GE

GE was one of the few companies bold enough to embrace Docker at its embryonic stage, and it has since become a leader in adopting it. The company operates many legacy apps, which slowed its deployment cycle. Since turning to Docker, GE has considerably reduced development-to-deployment time. It also achieves higher application density than with VMs, reducing operational costs.


Container Management Systems

As organizations grow, managing containerized workloads at scale becomes complex. Container management systems simplify this by automating deployment, scaling, and maintenance.

These systems handle orchestration, security, monitoring, storage, and networking, ensuring efficient resource utilization. AI-powered orchestration now enhances this by predicting workload demands, optimizing container placement, and automating failure recovery.

With smarter automation and self-healing capabilities, businesses can scale seamlessly while maintaining performance, security, and cost efficiency.

Popular Container Management Tools

Here are some of the most popular container managers for your business.

- Kubernetes: Kubernetes, originally developed by Google, is the most popular container management tool, and it quickly became the de facto standard for container management and orchestration. Google donated the project to the Cloud Native Computing Foundation (CNCF), so it is now backed by industry giants such as IBM, Microsoft, Google, and Red Hat. It lets you deploy, scale, and manage large clusters of containers with ease. It’s also open-source, cost-effective, and cloud-agnostic.

- Amazon EKS: As Kubernetes became the standard for container management, cloud providers started incorporating it into their platform offerings. Amazon Elastic Kubernetes Service (EKS) is a managed service for running Kubernetes on AWS. With EKS, organizations don’t need to install and operate the Kubernetes control plane, because AWS manages it for them. In a nutshell, EKS handles container orchestration for you. However, EKS is tied to the AWS cloud.

- Amazon ECS: Amazon Elastic Container Service (ECS) is a fully managed container orchestration service for AWS environments that helps organizations run microservices and batch jobs with ease. ECS looks similar to EKS, but it uses AWS’s own orchestration engine, whereas EKS runs Kubernetes. ECS has no control-plane fee, while EKS charges $0.10 per cluster per hour. That said, because EKS runs open-source Kubernetes, it benefits from a much larger community, whereas ECS is a proprietary AWS tool. ECS is primarily useful for teams that don’t have extensive DevOps resources or that find Kubernetes too complex.

- Amazon Fargate: Amazon Fargate is a serverless compute engine for containers that lets organizations run containers without managing servers or container clusters. It works with both ECS and EKS. While running ECS on your own instances offers more control over the infrastructure, it brings some management complexity. If you want to run specific tasks without worrying about infrastructure management, we recommend Fargate.

- Azure Kubernetes Service: Azure Kubernetes Service (AKS) is Microsoft’s fully managed Kubernetes service for Azure environments. It runs open-source Kubernetes and is mostly free, as you only pay for the associated resources. AKS integrates with Azure Active Directory (AD) and offers a higher security level with role-based access control. It integrates seamlessly with other Microsoft solutions and is easily managed using the Azure CLI or the Azure portal.

- Google Kubernetes Engine: Google Kubernetes Engine (GKE) is a managed Kubernetes service launched by Google in 2015 that runs Kubernetes on Google Compute Engine instances. GKE was the first managed Kubernetes service, followed by AKS and EKS, and it offers more features and automation than its competitors. Google charges a cluster management fee of $0.10 per hour per cluster.
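To put hourly control-plane fees in monthly terms, here is a small calculation using the EKS figure quoted for ECS vs EKS ($0.10 per cluster per hour; ECS has no such fee) and an average month of 730 hours (8,760 hours a year divided by 12). Worker nodes, storage, and traffic are billed separately and are not included, and provider prices change, so treat the rates as inputs rather than facts.

```python
HOURS_PER_MONTH = 8760 / 12  # 730 hours in an average month


def monthly_control_plane_cost(rate_per_hour: float, clusters: int = 1) -> float:
    """Monthly control-plane fee in USD for always-on clusters, rounded to cents."""
    return round(rate_per_hour * HOURS_PER_MONTH * clusters, 2)


if __name__ == "__main__":
    for service, rate in {"ECS": 0.0, "EKS": 0.10}.items():
        cost = monthly_control_plane_cost(rate, clusters=3)
        print(f"{service}: ${cost:.2f} per month for 3 clusters")
```

At $0.10 per hour, one always-on cluster costs about $73 a month before any compute, which matters mostly for teams running many small clusters (for example, one per environment).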

In today’s complex software development environments, which combine multiple operating systems, programming languages, plugins, frameworks, and architectures, Docker creates a standardized workflow environment for every team member throughout the product life cycle. More importantly, Docker is open-source and supported by a strong, vibrant community ready to help with any issues. Organizations that fail to take advantage of these Docker use cases risk falling behind their competitors.

This blog is available on DZone

FAQs

Redis, NGINX and Postgres are the three most widely used technologies running inside Docker containers. Other technologies include MySQL, MongoDB, ElasticSearch, RabbitMQ and HAProxy.

Docker technology is highly scalable. Combined with an orchestrator, infrastructure can be scaled to millions of containers, a scale at which companies such as Google and Twitter deploy containers.

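Yes. Docker runs natively on Linux, and Docker Desktop supports macOS and Windows. On those platforms, Linux containers run inside a lightweight virtual machine, and Windows also supports native Windows containers.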

Docker makes it easy for developers to quickly create, deploy, and manage apps on any platform. It is also resource-efficient at its core, allowing you to run more apps on the same hardware than with traditional virtual machines.

Docker plays a key role in AI/ML development by providing a consistent environment for running experiments and deploying models.

Data scientists use Docker to encapsulate machine learning frameworks and libraries so that models run reliably on any machine, from laptops to cloud servers. This consistency simplifies managing complex dependencies (e.g., specific Python packages or CUDA libraries) and ensures reproducible results. Docker makes it easy to share ML environments and collaborate on models: colleagues can pull the same container image and get an identical setup. By containerizing ML workflows, teams avoid the “it works on my machine” problem, making it easier to move from model development to production deployment reliably.