At this point, if AI isn’t part of your application, you’re falling behind in a world that expects speed, personalization, and smarter interactions. So, let me explain how to integrate AI into a React application. I’ll cover cloud-based APIs from providers like OpenAI and Google, on-device AI options using libraries such as TensorFlow.js, and the essential steps for setting up a React AI integration: choosing the relevant libraries and structuring the project effectively.

React.js already offers many benefits for building interactive UIs and functional apps, but adding AI can make them even more user-centric. Features like intelligent chatbots, AI search, and predictive analytics help users find what they need faster and get things done with less effort.

Whether you’re working on SPAs, dashboards, e-commerce sites, or productivity tools, integrating AI into your React app isn’t just a nice-to-have; it makes good apps great!

Furthermore, I will address critical aspects of optimizing AI performance in React apps, including caching, smarter API calls, and Web Workers, alongside vital considerations for security, data privacy, bias auditing, and suitable deployment platforms for AI-powered React applications. So, let me explain how React AI integration works.

- Choosing the Right AI Tools

- Setting Up Your React Project

- Using AI APIs in React (Cloud-Based AI)

- Implementing On-Device AI with TensorFlow.js

- How to Optimize AI Performance in React

- Security & Deployment

- Protecting API Keys & User Data

- FAQs

Choosing the Right AI Tools

Integrating AI doesn’t necessarily mean reinventing the wheel. More often than not, my goal isn’t to build a model from scratch but to bring existing AI tools into my React app, whether for natural language processing, image recognition, or predictive analytics.

Cloud AI APIs

If you need AI-powered features without setting up complex infrastructure or training and hosting models yourself, cloud APIs are the way to go. These services do the heavy processing on their end, and you access it with just a few API calls.

- OpenAI API: The same tech behind ChatGPT, it’s great for adding conversational AI, text summarization, and code generation. If you want an AI-powered chatbot or smart auto-complete, this is your go-to. Keep in mind that the API is pay-as-you-go: your API account needs available credits, otherwise requests fail with an error.

- Google Cloud AI: Offers tools for everything from speech recognition to computer vision. I would say it’s one of the best options if you need to analyze images or transcribe audio in real time.

- Hugging Face: A hub for pre-trained AI models, perfect if you need NLP, text classification, or translation without training a model yourself.

On-Device AI: Speed and Privacy Right in the Browser

Sometimes, running AI directly in the browser is the better option, especially for real-time processing, privacy concerns, or when you don’t want to rely on an internet connection.

- TensorFlow.js: This brings machine learning straight to the front end, letting you run AI models directly in the browser. It’s great for instant image recognition in a React app or predictive text suggestions without a backend dependency.

- ONNX.js: If you have a pre-trained AI model and want to run it in a React app without hammering your servers, ONNX.js lets you execute ONNX models in the browser. Its successor, ONNX Runtime Web (the onnxruntime-web package), is the actively maintained option today.

- Brain.js: A lightweight JS library for neural networks, useful for things like predicting user behavior or identifying patterns in data right inside your React app.

Setting Up Your React Project

Before diving headfirst into React AI integration, you need a solid React setup. If you haven’t created a React app yet, Vite and Create React App (CRA) are two common ways to get started, although the React team deprecated CRA for new projects in early 2025. I prefer Vite because it’s lightweight and doesn’t make me wait five minutes every time I start a project.

To set it up:

```bash
# With npm 7+, the extra "--" passes --template through to create-vite
npm create vite@latest react-ai-app -- --template react
cd react-ai-app
npm install
npm run dev
```

If you’re sticking with CRA, you can use:

```bash
npx create-react-app react-ai-app
cd react-ai-app
npm start
```

Next, you’ll want to install the right libraries depending on your desired AI functionality. I’ll run through the essentials below:

- Axios – If you’re calling cloud AI APIs like OpenAI, Google Cloud AI, or Hugging Face, you’ll need an HTTP client to handle the requests (the browser’s built-in fetch works too). Use: npm install axios

- OpenAI SDK – If you’re using OpenAI’s API (for chatbots, text generation, etc.). Use: npm install openai

- TensorFlow.js – If you want AI running directly in the browser. Use: npm install @tensorflow/tfjs

- Hugging Face Transformers – For integrating NLP models like BERT and GPT-2. Use: npm install @huggingface/transformers

Project Structure and Environment Setup

A well-structured project makes your life easier as your AI features grow. Here’s a simple setup that I recommend:

```
react-ai-app/
│── src/
│   │── components/   # Reusable UI components
│   │── services/     # API calls and AI logic
│   │── hooks/        # Custom hooks (if needed)
│   │── pages/        # Different app pages
│   │── assets/       # Static files (images, etc.)
│   │── App.jsx       # Main component (App.js in CRA)
│── public/
│── .env              # API keys and environment variables
│── package.json
```

Now that your project is set up and the dependencies are installed, we’re ready to get our hands dirty and integrate AI into the React application.

Build Smart Apps! Partner with ClickIT’s React & AI team

Using AI APIs in React (Cloud-Based AI)

As I mentioned earlier, you don’t have to reinvent the wheel. Plus, not all AI needs to run on your app’s front end. Cloud-based AI services handle the heavy lifting: processing natural language, analyzing images, and generating intelligent responses. That leaves us free to focus on integrating these features into our React app.

Choosing a Cloud AI Service

There are plenty of AI APIs out there, but here are some of the most useful for React applications:

- OpenAI API – Best for chatbots, text generation, and natural language processing.

- Google Cloud AI – Offers speech recognition, vision AI, and machine learning models.

- IBM Watson – A strong choice for enterprise-grade AI, including sentiment analysis and chatbots.

For this example, I’ll integrate OpenAI’s API to build an AI-powered chatbot in a React app.

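First, install the OpenAI SDK and Axios. The example below only uses Axios, since it calls the REST endpoint directly; the SDK becomes handy once you move the call to a backend:

```bash
npm install openai axios
```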

Here’s how you can call OpenAI’s GPT model to generate chatbot responses.

```jsx
import { useState } from "react";
import axios from "axios";

const Chatbot = () => {
  const [input, setInput] = useState("");
  const [messages, setMessages] = useState([]);

  const sendMessage = async () => {
    if (!input.trim()) return;

    // Append the user's message to the history that is sent to the model
    const userMessage = { role: "user", content: input };
    const updatedMessages = [...messages, userMessage];
    setMessages(updatedMessages);
    setInput(""); // clear the box right away instead of after the reply

    try {
      const response = await axios.post(
        "https://api.openai.com/v1/chat/completions",
        {
          model: "gpt-3.5-turbo",
          messages: updatedMessages,
        },
        {
          headers: {
            // Demo only: a key used in the browser is visible to every visitor.
            // CRA exposes REACT_APP_* via process.env; Vite uses import.meta.env.VITE_*.
            Authorization: `Bearer ${process.env.REACT_APP_OPENAI_API_KEY}`,
            "Content-Type": "application/json",
          },
        }
      );

      // The reply text is in the first choice returned by the Chat Completions API
      const botMessage = {
        role: "assistant",
        content: response.data.choices[0].message.content,
      };
      // Functional update so the reply never overwrites messages added meanwhile
      setMessages((prev) => [...prev, botMessage]);
    } catch (error) {
      console.error("Error calling OpenAI API:", error);
    }
  };

  return (
    <div>
      <div>
        {messages.map((msg, index) => (
          <p key={index} style={{ color: msg.role === "user" ? "blue" : "green" }}>
            {msg.content}
          </p>
        ))}
      </div>
      <input value={input} onChange={(e) => setInput(e.target.value)} />
      <button onClick={sendMessage}>Send</button>
    </div>
  );
};

export default Chatbot;
```

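Finally, I also recommend keeping sensitive API keys out of your codebase by storing them in a .env file at the root of your project (and adding .env to .gitignore so it never gets committed).

With CRA, that should look like:

```
REACT_APP_OPENAI_API_KEY=your_api_key_here
```

Vite only exposes variables prefixed with VITE_, so there you would name it VITE_OPENAI_API_KEY instead. Then, restart your development server (npm run dev for Vite, npm start for CRA), and access the key in your code like this:

```javascript
const apiKey = process.env.REACT_APP_OPENAI_API_KEY; // CRA
// const apiKey = import.meta.env.VITE_OPENAI_API_KEY; // Vite
```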

Another tip I swear by is to use the same Node.js and React versions in development and production to avoid compatibility issues.


Implementing On-Device AI with TensorFlow.js

So far, we’ve looked at cloud-based AI, but what if you want AI running directly in the browser without sending data to an external server? That’s where TensorFlow.js comes in.

What is TensorFlow.js?

TensorFlow is Google’s end-to-end machine learning platform, designed to handle everything from data preparation to deploying AI models in production. Traditionally, it’s a Python-based framework, heavily used for deep learning models like neural networks.

But Python doesn’t run natively in the browser the way JavaScript does. That’s why Google created TensorFlow.js, a JavaScript library that lets you:

- Run AI models in the browser (no backend needed).

- Use pre-trained models for things like image recognition and text processing.

- Train new models directly in the client-side app.

So, when I integrate TensorFlow.js into a React app, I can run AI tasks locally without network round trips, which makes it great for real-time applications like image recognition, gesture detection, and predictive text.

Let me simplify it even more:

When to use TensorFlow.js (On Device) to Integrate AI into a React Application:

- If you need low-latency AI (e.g., real-time object detection).

- If you want to process data locally for privacy reasons.

- If you need AI features to work offline.

When to use Cloud AI (Backend) to Integrate AI into a React Application:

- If your AI model is too large for the browser.

- If you need high computational power (e.g., training complex models).

- If your app requires AI-generated responses based on external data sources.

Now, let me try to build an image recognition feature in React using TensorFlow.js and a pre-trained MobileNet model.

```bash
npm install @tensorflow/tfjs @tensorflow-models/mobilenet
```

Then, create the component:

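```jsx
import { useEffect, useRef, useState } from "react";
import * as mobilenet from "@tensorflow-models/mobilenet";
import "@tensorflow/tfjs"; // registers the TensorFlow.js backends MobileNet runs on

const ImageRecognition = () => {
  const [model, setModel] = useState(null);
  const [imageUrl, setImageUrl] = useState(null);
  const [prediction, setPrediction] = useState(null);
  const imageRef = useRef(null);

  // Load MobileNet once on mount instead of re-loading it on every click
  useEffect(() => {
    mobilenet.load().then(setModel);
  }, []);

  const handleFileChange = (e) => {
    const file = e.target.files?.[0];
    if (!file) return; // the user cancelled the file dialog
    setPrediction(null);
    setImageUrl(URL.createObjectURL(file));
  };

  const classifyImage = async () => {
    if (!model || !imageRef.current) return;
    const results = await model.classify(imageRef.current);
    setPrediction(results[0]); // results are sorted by probability; [0] is the top prediction
  };

  return (
    <div>
      <h2>AI Image Recognition</h2>
      <input type="file" accept="image/*" onChange={handleFileChange} />
      {imageUrl && <img ref={imageRef} src={imageUrl} alt="Upload" width="300" />}
      <button onClick={classifyImage} disabled={!model || !imageUrl}>
        {model ? "Analyze Image" : "Loading model..."}
      </button>
      {prediction && (
        <p>
          Prediction: {prediction.className} (Confidence:{" "}
          {(prediction.probability * 100).toFixed(2)}%)
        </p>
      )}
    </div>
  );
};

export default ImageRecognition;
```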

Moreover, where you host your React app depends on your AI setup. Let me break it down:

Frontend-only with API Calls

If your app is all about static content and API calls, I’d lean toward Vercel, Netlify, or Firebase Hosting. They’re built to handle static sites with ease.

Backend AI Processing

When your AI work happens on the server, platforms like Render, AWS, or even a Node.js server on DigitalOcean are solid choices. They give you the flexibility and control you might need.

On-device AI Models

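If you’re running AI models directly in the browser (like with TensorFlow.js), just make sure your host serves .wasm files with the correct application/wasm MIME type (relevant if you use TensorFlow.js’s WebAssembly backend) and uses HTTPS, which service workers require for caching.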

Let me also say that while TensorFlow.js is great for real-time AI applications, for more powerful models, you may still want to rely on cloud-based AI. Next, we’ll look at optimizing AI performance in React apps.

Hire ClickIT’s React development and AI integration expertise to fuel your app’s success.

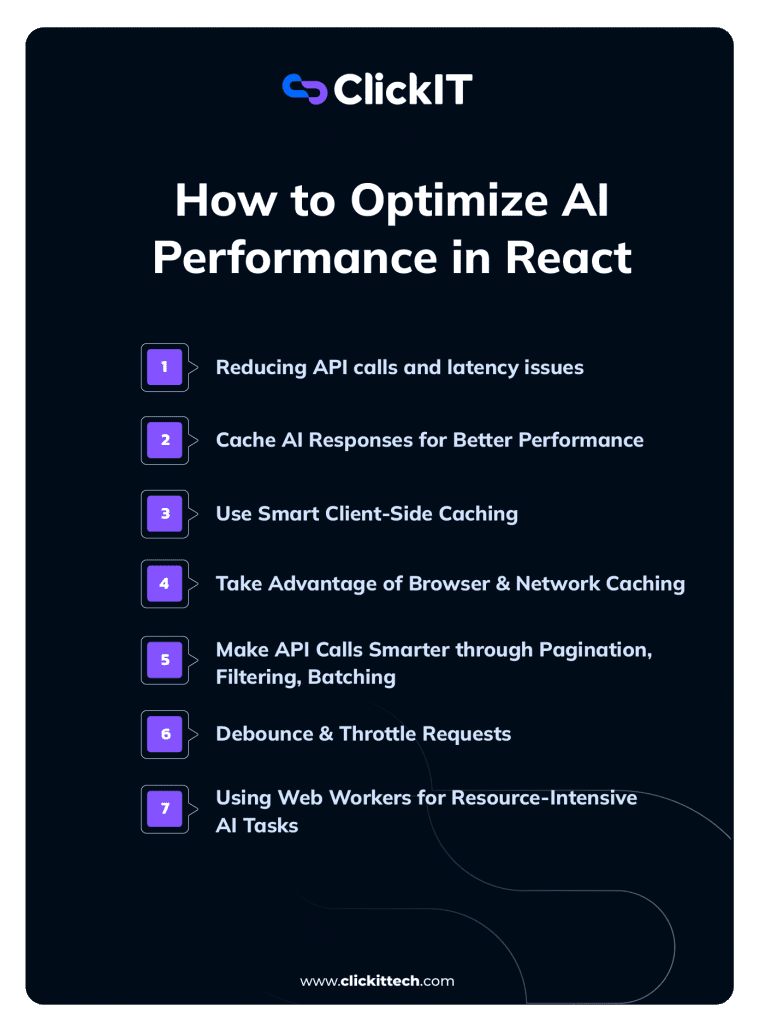

How to Optimize AI Performance in React

At this point, you’ll agree that React AI integration will take your app to the next level. Unfortunately, these new features may introduce slowdowns like latency from API calls, heavy computations freezing the UI, and unnecessary data re-fetching. So, I’ll quickly go over how to integrate AI into a React application without turning your app into a laggy mess.

Reducing API Calls and Latency Issues

AI APIs aren’t cheap or instant. Every request uses time and resources, and if the requests are unnecessary, they waste valuable computing power and bandwidth. How do you fix this? I recommend that you reduce, reuse, and optimize. Let me break that down.

Cache AI Responses for Better Performance

Firstly, repeatedly fetching the same AI-generated responses can be inefficient, especially when real-time updates aren’t necessary. For example, if I’m building an AI-powered e-commerce recommendation system, there’s no need to query the AI API every time a user refreshes the page.

Instead, do the following:

Use Smart Client-Side Caching

- React Query (TanStack Query): Automatically caches API responses and refreshes data only when needed. Fine-tune caching with staleTime and gcTime (called cacheTime before TanStack Query v5).

- Apollo Client (for GraphQL apps): Built-in caching avoids redundant requests by normalizing and storing query results.

- Local Storage & IndexedDB: Store AI results persistently across sessions when long-term reuse is required.
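If you’d like to see the idea without a library, here is a minimal sketch of what these caches do under the hood: reuse a stored response until it expires. The helper name createCachedFetcher and the five-minute default TTL are illustrative assumptions, not part of React Query or any other library.

```javascript
// Hypothetical helper: wraps an async AI call with an in-memory TTL cache.
// It caches the promise itself, so identical requests made while the first
// one is still in flight share a single API call.
function createCachedFetcher(fetchFn, { ttlMs = 5 * 60 * 1000, now = Date.now } = {}) {
  const cache = new Map(); // key -> { promise, expiresAt }

  return function cachedFetch(key) {
    const entry = cache.get(key);
    if (entry && entry.expiresAt > now()) {
      return entry.promise; // fresh hit: no new API request
    }

    const promise = fetchFn(key); // fetchFn is assumed to return a Promise
    cache.set(key, { promise, expiresAt: now() + ttlMs });

    // Don't cache failures: drop the entry so the next call retries
    promise.catch(() => {
      if (cache.get(key)?.promise === promise) cache.delete(key);
    });
    return promise;
  };
}

// Usage sketch (callYourAIApi is a hypothetical function that hits your AI endpoint):
// const getRecommendations = createCachedFetcher((productId) => callYourAIApi(productId));
```

React Query layers more on top of this, such as background refetching and automatic garbage collection of unused entries.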

Take Advantage of Browser & Network Caching

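- Take advantage of HTTP Caching (ETag, Cache-Control): I’ll also recommend sending these headers with your API responses so the browser can store and revalidate them, reducing unnecessary network requests. This works for GET requests; browsers generally don’t cache POST responses, which many AI APIs use.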

- Use Service Workers: Store AI responses offline for Progressive Web Apps (PWAs) or scenarios where intermittent connectivity is a concern.
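To make HTTP caching concrete, here is a sketch of the server side for a GET route you control (for example, in your own proxy) that returns cached AI recommendations. It assumes Node.js; buildCachedResponse is a hypothetical helper, and the 300-second max-age is an arbitrary example value.

```javascript
const crypto = require("node:crypto");

// Hypothetical helper for a GET route that returns AI results.
// It derives an ETag from the response body and answers a matching
// If-None-Match header with 304 Not Modified, so the browser reuses its copy.
// Simplified: real If-None-Match headers may list several tags or use W/ prefixes.
function buildCachedResponse(body, ifNoneMatch, maxAgeSeconds = 300) {
  const json = JSON.stringify(body);
  const etag = `"${crypto.createHash("sha1").update(json).digest("hex")}"`;
  const headers = {
    // "private": only the user's browser may cache it, not shared proxies or CDNs
    "Cache-Control": `private, max-age=${maxAgeSeconds}`,
    ETag: etag,
  };

  if (ifNoneMatch === etag) {
    return { status: 304, headers, body: null }; // the client's copy is still valid
  }
  return { status: 200, headers: { ...headers, "Content-Type": "application/json" }, body: json };
}
```

With Node’s http module, you’d pass req.headers["if-none-match"] as the second argument and write result.headers and result.body to the response.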

Make API Calls Smarter through Pagination, Filtering, Batching

Secondly, pulling large datasets in just a single API call is a bad idea because it increases payload size and latency. What you want to do is:

- Use Pagination: Fetch only the data you need, typically with limit and offset parameters.

- Filter on the Server Side: Only request relevant AI results using server-side filtering to reduce the volume of fetched data.

- Batch API Calls: If you need several AI results at once, combine them into a single request (via GraphQL or a custom batch endpoint) instead of firing many small API calls. This reduces the total number of HTTP round trips and speeds up data retrieval.
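Batching can also be done on the client. The sketch below, loosely modeled on the DataLoader pattern, collects AI requests made during the same tick and sends them in one call. createBatcher is hypothetical, and it assumes you have a batch endpoint (wrapped by sendBatch) that accepts an array of inputs and resolves to results in the same order.

```javascript
// Hypothetical micro-batcher: individual load(input) calls made in the same
// tick are queued and sent together as ONE sendBatch(inputs) call.
// sendBatch must return a Promise of an array of results in input order.
function createBatcher(sendBatch) {
  let queue = []; // pending { input, resolve, reject }
  let scheduled = false;

  function flush() {
    scheduled = false;
    const batch = queue;
    queue = [];
    if (batch.length === 0) return;
    sendBatch(batch.map((item) => item.input))
      .then((results) => batch.forEach((item, i) => item.resolve(results[i])))
      .catch((err) => batch.forEach((item) => item.reject(err)));
  }

  function load(input) {
    return new Promise((resolve, reject) => {
      queue.push({ input, resolve, reject });
      if (!scheduled) {
        scheduled = true;
        queueMicrotask(flush); // send once the current synchronous code finishes
      }
    });
  }

  return { load, flush };
}
```

Three components calling load() during the same render would then produce a single HTTP request instead of three.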

Debounce & Throttle Requests

Additionally, if the AI feature reacts to user input (for example, search or autocomplete), don’t let it trigger excessive requests on every keystroke.

What you should do here is to implement debouncing and throttling. Here’s what I mean:

- Debounce: Wait until the user stops typing before making a request.

- Throttle: Limit how often API calls can be made in a set time frame.

Let me show you an example below:

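```jsx
import { useEffect, useMemo, useState } from "react";
import { debounce } from "lodash"; // npm install lodash

const SearchAI = () => {
  const [query, setQuery] = useState("");

  // Create the debounced function once; it fires 500 ms after the last keystroke
  const fetchAIResults = useMemo(
    () =>
      debounce((input) => {
        console.log("Fetching AI results for:", input);
        // Call AI API here
      }, 500),
    []
  );

  // Cancel any pending call when the component unmounts
  useEffect(() => () => fetchAIResults.cancel(), [fetchAIResults]);

  return (
    <input
      type="text"
      value={query}
      placeholder="Search AI..."
      onChange={(e) => {
        setQuery(e.target.value);
        fetchAIResults(e.target.value);
      }}
    />
  );
};

export default SearchAI;
```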
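The example above covers debouncing. For throttling, lodash also provides throttle, but the core idea fits in a few lines. This is a minimal leading-edge sketch (a hypothetical helper, not lodash’s implementation): the first call runs immediately and further calls are dropped until the interval has passed. Unlike lodash’s default, it does not fire a trailing call at the end of the window, and the clock is injectable so the behavior is easy to test.

```javascript
// Hypothetical leading-edge throttle: fn runs at most once per intervalMs.
// Calls that arrive inside the window are dropped, not queued.
function throttle(fn, intervalMs, now = Date.now) {
  let lastRun = -Infinity;
  return function throttled(...args) {
    const t = now();
    if (t - lastRun >= intervalMs) {
      lastRun = t;
      return fn.apply(this, args);
    }
    return undefined; // dropped: still inside the throttle window
  };
}

// Usage sketch: at most one AI suggestion request per second while scrolling
// const requestSuggestions = throttle(() => fetchSuggestions(), 1000); // fetchSuggestions is hypothetical
```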

Using Web Workers for Resource-Intensive AI Tasks

Running AI models directly in the browser (e.g., TensorFlow.js, ONNX.js) can block the main UI thread, which is a problem because it can cause lag and poor responsiveness. A simple solution is to offload intensive AI computations to Web Workers.

Why do I recommend using Web Workers?

- Web Workers can help run AI tasks in the background, keeping the UI responsive.

- Worker Threads (Node.js) can handle parallel AI computations on the server, improving scalability and performance.
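In the browser, you’d create a worker with new Worker(...) and exchange data through postMessage and onmessage. Since the point above also mentions Node.js Worker Threads, here is a self-contained sketch using node:worker_threads, where the same message-passing pattern applies. The scoring function is a hypothetical CPU-heavy stand-in for model inference (cosine similarity against many candidate vectors), and the worker code is inlined with eval: true only to keep the sketch in one file.

```javascript
const { Worker } = require("node:worker_threads");

// Worker code, inlined with eval: true so this sketch fits in one file.
// It stands in for CPU-heavy inference: cosine similarity between a query
// vector and many candidate vectors (hypothetical workload).
const workerSource = `
  const { parentPort } = require("node:worker_threads");
  const norm = (v) => Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  parentPort.on("message", ({ query, candidates }) => {
    const queryNorm = norm(query);
    const scores = candidates.map((c) => {
      const dot = c.reduce((sum, x, i) => sum + x * query[i], 0);
      return dot / (queryNorm * norm(c));
    });
    parentPort.postMessage(scores);
  });
`;

// Runs the scoring off the main thread and resolves with the scores.
function scoreInWorker(query, candidates) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true });
    worker.once("message", (scores) => {
      resolve(scores);
      worker.terminate(); // one-shot worker: stop it once the result arrives
    });
    worker.once("error", reject);
    worker.postMessage({ query, candidates });
  });
}
```

Starting a fresh worker per call keeps the sketch short; in practice you’d reuse a worker (or a pool), because spinning one up has a cost.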


Security and Deployment

Although integrating AI into a React application can unlock useful features, it also raises security, privacy, and ethical concerns, and sometimes deployment challenges.

AI-powered apps often deal with user inputs, personal data, and API keys, making security and compliance non-negotiable. If you’re not careful, you could end up with leaked keys, exposed user data, or biased models that make unfair decisions. You may even run into compliance issues with laws like GDPR and CCPA. Make sure you’re familiar with the ethics and compliance requirements for SaaS applications.

Below, I’ll explain how to keep your AI-powered app secure, compliant, and production-ready.

Protecting API Keys and User Data

AI APIs (like OpenAI, Google Cloud AI, and Hugging Face) require authentication keys. If these keys get exposed, bad actors could steal them, rack up expensive API charges, or abuse your app.

If you accidentally hardcode them in your React app, congratulations, you just gave everyone on the internet free access to your API! React code runs in the browser, so everything in your front-end bundle is visible to users. Never hardcode API keys in your React code: anyone who inspects your source or network requests can grab them.

Here are some best practices to protect API keys:

- Use Environment Variables: Store keys in a .env file, then access them in React. I should mention, though, that variables used by frontend React code are embedded into the JavaScript bundle at build time, so they are still exposed to anyone who inspects it. So, here are some more measures:

- Use a Proxy: If you don’t want to set up a full backend, you can use a serverless function on Vercel or Netlify as a lightweight proxy that keeps the key on the server.

- Keep API Calls in the Backend: Instead of making AI requests from the front end, use a Node.js/Express server to handle them securely.

- Authentication Tokens Instead of API Keys: Some APIs let you use OAuth or JWT (JSON Web Tokens), so users authenticate and get temporary access tokens instead of needing a hardcoded API key.
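Here’s a minimal sketch of the proxy idea: a framework-agnostic handler you could call from an Express route or a serverless function. The API key is read from a server-side environment variable (OPENAI_API_KEY, an assumed name) and never reaches the browser. handleChatRequest, the 50-message limit, and the response shape are illustrative assumptions; fetchImpl defaults to the global fetch available in Node.js 18+ and can be swapped out for testing.

```javascript
// Hypothetical server-side proxy core: validates the client payload, adds the
// secret key, forwards the request to OpenAI, and returns only the reply text.
async function handleChatRequest(
  body,
  { apiKey = process.env.OPENAI_API_KEY, fetchImpl = globalThis.fetch } = {}
) {
  const messages = body?.messages;
  // Basic validation so the proxy can't be used to send arbitrary payloads
  const valid =
    Array.isArray(messages) &&
    messages.length > 0 &&
    messages.length <= 50 &&
    messages.every((m) => typeof m?.role === "string" && typeof m?.content === "string");
  if (!valid) {
    return { status: 400, body: { error: "Expected 1-50 messages with string role and content" } };
  }
  if (!apiKey) {
    return { status: 500, body: { error: "Server is missing OPENAI_API_KEY" } };
  }

  const upstream = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`, // the key only ever exists on the server
      "Content-Type": "application/json",
    },
    // The server, not the client, decides which model is used
    body: JSON.stringify({ model: "gpt-3.5-turbo", messages }),
  });

  if (!upstream.ok) {
    // Keep provider error details out of the response sent to the browser
    return { status: 502, body: { error: `Upstream request failed (${upstream.status})` } };
  }
  const data = await upstream.json();
  return { status: 200, body: { reply: data.choices[0].message.content } };
}
```

With Express, for example, an app.post("/api/chat", ...) route (with express.json() enabled) could pass req.body to this function and reply with res.status(result.status).json(result.body). The React chatbot would then POST to /api/chat instead of calling OpenAI with a key in the bundle.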

Ensuring User Data Privacy & Compliance

Privacy laws like GDPR (Europe) and CCPA (California) exist for a reason—AI-powered apps process user data, and mismanaging it can lead to legal trouble (and, unfortunately, a loss of user trust). You could even get severe fines!

Here are a few tips to ensure user data privacy and compliance:

- Only collect what’s necessary: Store only essential user information; don’t hoard data you don’t need.

- Anonymize sensitive information: If you store AI-generated user insights, remove identifiable details.

- Allow users to opt out: Transparency is key. Let users control their data and request deletion if needed.
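As an illustration of the first two tips, here is a sketch of a sanitizing step that runs on your server before a record is stored or sent to an AI API. The field allow-list, the e-mail regex, and the helper name prepareForAI are assumptions for the example. Keep in mind that replacing an ID with a keyed hash is pseudonymization rather than full anonymization: anyone holding the secret can re-link the data, and GDPR still treats pseudonymized data as personal data.

```javascript
const crypto = require("node:crypto");

// Hypothetical allow-list: only these fields ever leave this function
const ALLOWED_FIELDS = ["userId", "country", "query"];
// Simplified e-mail pattern for masking addresses inside free text
const EMAIL_RE = /[\w.+-]+@[\w-]+\.[\w.-]+/g;

function prepareForAI(record, secret) {
  // Data minimization: copy only the allow-listed fields
  const out = {};
  for (const field of ALLOWED_FIELDS) {
    if (field in record) out[field] = record[field];
  }
  // Pseudonymization: a keyed HMAC gives a stable ID that isn't the real one
  if (out.userId !== undefined) {
    out.userId = crypto.createHmac("sha256", secret).update(String(out.userId)).digest("hex");
  }
  // Mask e-mail addresses that users type into free-text fields
  if (typeof out.query === "string") {
    out.query = out.query.replace(EMAIL_RE, "[email]");
  }
  return out;
}
```

In practice, the secret would come from a server-side environment variable, never from the React bundle.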

Auditing AI for Bias

While it’s great to know how to integrate AI into a React application, artificial intelligence is not perfect, at least not yet as I write this. AI learns from datasets, and if those datasets contain bias (which happens often), your app’s AI could produce unfair or discriminatory results.

For example, if I built a React app with AI features to analyze resumes, approve loans, or generate recommendations, I’d need to audit the model to prevent unfair decisions. Here’s what I would do to keep bias out of my app:

- Regularly audit AI models for biases and unintended behavior.

- Test with diverse user groups to catch potential issues.

- Allow user feedback to flag incorrect or unfair AI responses.
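One simple, quantitative starting point for the audit in the first bullet is comparing outcome rates across groups. The sketch below computes each group’s approval rate and the disparate impact ratio (lowest rate divided by highest). The 0.8 default threshold follows the "four-fifths rule" used as a screening heuristic in U.S. employment guidelines; a flag is a signal to investigate, not proof that a model is fair or unfair. auditSelectionRates and the { group, approved } record shape are hypothetical.

```javascript
// Hypothetical audit helper: per-group approval rates plus the disparate
// impact ratio (min rate / max rate), flagged when it falls below threshold.
function auditSelectionRates(decisions, threshold = 0.8) {
  const stats = new Map(); // group -> { total, positive }
  for (const { group, approved } of decisions) {
    const s = stats.get(group) ?? { total: 0, positive: 0 };
    s.total += 1;
    if (approved) s.positive += 1;
    stats.set(group, s);
  }

  const rates = {};
  for (const [group, s] of stats) rates[group] = s.positive / s.total;

  const values = Object.values(rates);
  const highest = values.length ? Math.max(...values) : 0;
  // If no group has any approvals, there is no disparity to measure
  const disparateImpact = highest > 0 ? Math.min(...values) / highest : 1;
  return { rates, disparateImpact, flagged: disparateImpact < threshold };
}
```

Running this periodically on logged decisions (for example, per model version) turns "regularly audit" into a concrete check you can track over time.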

Deploying an AI-powered React App

Once your React App with AI is ready, it’s time to ship to production and let the world see the magic we’ve built. But where should you deploy it? AI features may sometimes have additional hosting and scaling requirements, especially when running server-side AI logic or handling high API request volumes.

Choosing a Hosting Platform: Here’s how I see the hosting platform options you should consider:

- Vercel – Best for seamless deployment, automatic scaling, built-in CI/CD, and serverless functions.

- Netlify – Similar to Vercel but slightly more focused on static sites, with built-in CI/CD, serverless functions, and API handling.

- AWS Amplify – A full-stack solution with hosting, authentication, and backend AI services, best suited for apps that need deep AWS integration. This one may have a steeper learning curve.

In my opinion, Vercel is the fastest way to get it online.

Scaling AI-Powered Features

AI can be resource-intensive, and as your user base grows, you’ll need to scale your AI features efficiently.

- Use Edge Functions: Services like Vercel Edge Functions can cut latency by running lightweight logic (such as proxying AI requests) closer to users. For compute-heavy AI tasks, though, GPU-backed infrastructure (like GPU instances on AWS EC2, RunPod, or dedicated AI APIs) is a better fit; note that AWS Lambda does not offer GPUs.

- Optimize AI Calls: Reduce API costs by caching responses, batching requests, and using Web Workers for heavy processing.

- Implement Rate Limiting: Prevent API abuse by limiting requests per user/IP.
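For the rate-limiting point, here is a minimal token-bucket sketch keyed by user ID or IP. createRateLimiter, its default capacity of 10 requests, and the refill rate of one token per second are illustrative assumptions. Because the state lives in memory, it only works within a single process; with several servers or serverless instances, you’d keep the buckets in a shared store such as Redis.

```javascript
// Hypothetical in-memory token-bucket limiter. Each key (user ID or IP) gets
// `capacity` tokens that refill continuously at `refillPerSec`; every request
// spends one token and is rejected when the bucket is empty.
function createRateLimiter({ capacity = 10, refillPerSec = 1, now = Date.now } = {}) {
  const buckets = new Map(); // key -> { tokens, last }

  return function allow(key) {
    const t = now();
    const b = buckets.get(key) ?? { tokens: capacity, last: t };
    // Refill based on elapsed time, never above capacity
    b.tokens = Math.min(capacity, b.tokens + ((t - b.last) / 1000) * refillPerSec);
    b.last = t;
    const allowed = b.tokens >= 1;
    if (allowed) b.tokens -= 1;
    buckets.set(key, b);
    return allowed;
  };
}
```

In a proxy route, you’d call allow() with the caller’s IP or authenticated user ID before forwarding anything to the AI provider, and respond with HTTP 429 Too Many Requests when it returns false.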

FAQs on How to Integrate AI into a React Application

An AI-powered React application can integrate features like real-time data processing, automated content generation, AI-driven image and video recognition, personalized recommendations, and natural language processing (e.g., voice assistants, chatbots, and sentiment analysis).

To integrate OpenAI’s API into a React application, obtain an API key from OpenAI and store it securely using backend environment variables. Set up an API route (e.g., an Express server, Vercel/Netlify serverless function) to handle requests. The frontend sends user input to this backend, which forwards the request to OpenAI’s API and returns the response.

TensorFlow.js enables AI inference to run in the browser, supporting tasks like image recognition, object detection, and speech analysis. Pre-trained models can be integrated into React apps for real-time processing without a backend, reducing latency and improving privacy while enabling offline functionality.

An AI-powered React application can be deployed on platforms like Vercel, Netlify, AWS Amplify, or Render. If the application relies on a backend for AI processing, services like AWS Lambda, Firebase Functions, or an Express.js server on Render or Railway can manage API calls securely.